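Quantitative Finance and Financial Programming

L05 Data Import and Tidying | Pre-class Reading

曾永艺

School of Management, Xiamen University

2023-10-25

"How does the pond stay so clear? Because fresh water keeps flowing in from its source." (Zhu Xi, Song dynasty)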

"Like families, tidy datasets are all alike but every messy dataset is messy in its own way." (Hadley Wickham)

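1. Data import

- Reading rectangular text data: the readr package (2.1.4)
- Reading other types of data

2. Data tidying

- Tidy data
- The tidyr package (1.3.0)
- pivot_longer() and pivot_wider()
- separate_*() and unite()
- pivot_*() and separate_*(): a complex case study
- unnest_*() and hoist()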

1. Data Import

>> Reading text files: the readr package

☑ Consistent function naming

read_csv() | read_csv2() | read_tsv() | read_delim() | read_table() | read_fwf() | read_lines() | read_lines_raw() | read_file() | read_file_raw() | read_log()

☑ Largely consistent arguments

    read_*(
      file,
      # delim = NULL, escape_backslash = FALSE, escape_double = TRUE,
      col_names = TRUE,
      col_types = NULL,
      col_select = NULL,
      id = NULL,
      locale = default_locale(),
      na = c("", "NA"),
      quoted_na = TRUE,
      quote = "\"",
      comment = "",
      trim_ws = TRUE,
      skip = 0,
      n_max = Inf,
      guess_max = min(1000, n_max),
      name_repair = "unique",
      num_threads = readr_threads(),
      progress = show_progress(),
      show_col_types = should_show_types(),
      skip_empty_rows = TRUE,
      lazy = should_read_lazy()
    )
    # file: Either a path to a file, a connection, or literal data.
    # Files ending in .gz, .bz2, .xz, or .zip will be automatically uncompressed.
    # Files starting with http://, https://, ftp://, or ftps:// will be downloaded.
    # Using a value of clipboard() will read from the system clipboard.
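To see how several of these shared arguments work together, here is a minimal sketch that reads literal CSV text. The inline data, its column names, and the object name `prices` are made up for illustration; wrapping the string in `I()` tells readr to treat it as data rather than as a file path.

```r
library(readr)

# Hypothetical inline data: one metadata line, then a header and two rows
csv_text <- "source: made-up example\nticker,price,volume\nAAA,10.5,100\nBBB,NA,200\n"

prices <- read_csv(
  I(csv_text),                    # I() marks the string as literal data
  skip = 1,                       # skip the metadata line
  col_types = cols(
    ticker = col_character(),
    price  = col_double()
  ),
  col_select = c(ticker, price),  # keep only two of the three columns
  na = c("", "NA")                # strings to treat as missing (the default)
)
prices
```

The same arguments carry over to read_tsv(), read_delim(), and the other read_*() functions, which is the point of the consistent interface.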

☑ Compared with read.*() from the built-in utils package

- Returns a tibble* (more precisely, a spec_tbl_df)
- Does less by default: it does not automatically convert character variables to factors (read.*() has a stringsAsFactors argument), does not change column names, and does not turn a column into row names
- Depends less on system settings and environment variables, so results are more reproducible
- Usually reads faster (~50x) and shows a progress bar when reading large files

* See ?`tbl_df-class`. tibble: a subclass of data.frame and the central data structure for the tidyverse; lazy and surly - do less and complain more than base data.frames.

1. Columns are not automatically type-converted (e.g. character -> factor), and list-columns are supported natively
2. Only length-1 "scalar" vectors are recycled
3. tibble supports non-syntactic variable names, e.g. tibble(`:)` = "smile")
4. No row names are added, and storing data in row names is discouraged
5. Subsetting a tibble with [ always returns a new tibble; [[ and $ always return a vector, and $ does not partially match variable names
6. When printed, a tibble adapts to the screen size and shows more useful information (similar to str())
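A few of the differences listed above can be checked directly. This is a small sketch with a made-up two-row table; the names `df` and `tbl` are illustrative only.

```r
library(tibble)

df  <- data.frame(ticker = c("AAA", "BBB"), price = c(10.5, 20))
tbl <- as_tibble(df)

# `[` selecting one column: a data.frame drops to a vector, a tibble stays a tibble
class(df[, "price"])
class(tbl[, "price"])

# `[[` and `$` on a tibble return plain vectors
tbl[["price"]]
tbl$price

# `$` partially matches names on a data.frame, but not on a tibble
df$pri                     # matches the column "price"
suppressWarnings(tbl$pri)  # NULL (tibble warns about the unknown column)

# Non-syntactic names are allowed
tibble(`:)` = "smile")
```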

Common arguments by example: read_csv()

    library(tidyverse)

    read_csv("The 1st line of metadata
      The 2nd line of metadata
      # A comment to skip
      x,y,z
      1,2,3",
      skip = 2, comment = "#")
    #> Rows: 1 Columns: 3
    #> ── Column specification ────────────────
    #> Delimiter: ","
    #> dbl (3): x, y, z
    #>
    #> ℹ Use `spec()` to retrieve the full column specification for this data.
    #> ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.
    #> # A tibble: 1 × 3
    #>       x     y     z
    #>   <dbl> <dbl> <dbl>
    #> 1     1     2     3

    read_csv("1,2,3\n4,5,6")
    #> # A tibble: 1 × 3
    #>     `1`   `2`   `3`
    #>   <dbl> <dbl> <dbl>
    #> 1     4     5     6

    read_csv("1,2,3\n4,5,6", col_names = FALSE)
    #> # A tibble: 2 × 3
    #>      X1    X2    X3
    #>   <dbl> <dbl> <dbl>
    #> 1     1     2     3
    #> 2     4     5     6

    read_csv("1,2,3\n4,5,6", col_names = c("x", "y", "z"))
    #> # A tibble: 2 × 3
    #>       x     y     z
    #>   <dbl> <dbl> <dbl>
    #> 1     1     2     3
    #> 2     4     5     6

readr uses heuristics to parse the data in a text file

    # Generate an example data file
    set.seed(123456) # set the random seed
    nycflights13::flights %>%
      mutate(dep_delay = if_else(dep_delay <= 0, FALSE, TRUE)) %>%
      select(month:dep_time, dep_delay, tailnum, time_hour) %>%
      slice_sample(n = 20) %>%
      mutate(across(everything(), ~ifelse(runif(20) <= 0.1, NA, .))) %>%
      mutate(time_hour = lubridate::as_datetime(time_hour)) %>%
      print(n = 5) %>%
      write_csv(file = "data/ex_flights.csv", na = "--") # default: na = "NA"
    #> # A tibble: 20 × 6
    #>   month   day dep_time dep_delay tailnum time_hour
    #>   <int> <int>    <int> <lgl>     <chr>   <dttm>
    #> 1     6    26      932 TRUE      <NA>    2013-06-26 12:00:00
    #> 2    NA     5       NA NA        <NA>    2013-12-05 17:00:00
    #> 3     7    20      656 FALSE     N17245  2013-07-20 10:00:00
    #> 4     5    16       NA FALSE     N27152  2013-05-16 12:00:00
    #> 5    12    23       NA NA        <NA>    2013-12-23 23:00:00
    #> # ℹ 15 more rows

With the default na setting, the "--" markers are treated as ordinary text, so every column is read as character:

    (ex_flights <- read_csv("data/ex_flights.csv")) # default: na = c("", "NA")
    #> Rows: 20 Columns: 6
    #> ── Column specification ──────────────────────────────────────────────────────────────────
    #> Delimiter: ","
    #> chr (6): month, day, dep_time, dep_delay, tailnum, time_hour
    #>
    #> ℹ Use `spec()` to retrieve the full column specification for this data.
    #> ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.
    #> # A tibble: 20 × 6
    #>   month day   dep_time dep_delay tailnum time_hour
    #>   <chr> <chr> <chr>    <chr>     <chr>   <chr>
    #> 1 6     26    932      TRUE      --      2013-06-26T12:00:00Z
    #> 2 --    5     --       --        --      2013-12-05T17:00:00Z
    #> 3 7     20    656      FALSE     N17245  2013-07-20T10:00:00Z
    #> 4 5     16    --       FALSE     N27152  2013-05-16T12:00:00Z
    #> 5 12    23    --       --        --      2013-12-23T23:00:00Z
    #> # ℹ 15 more rows

Parsing proceeds in three steps:

1. Split the text file into a matrix of strings, using the given or guessed delimiter

2. Determine the type of each column of strings

   2.1 Given directly through the col_types argument

   2.2 Guessed: read guess_max rows of the file (v2 vs. v1: the two readr editions differ here) and try the candidate types in this order: logical -> integer -> double -> number -> time -> date -> date-time -> character

3. Parse each column of strings into a vector of the chosen type
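The guessing step can be explored on its own with readr's guess_parser(). The sketch below uses made-up input strings. Note that current readr does not guess integer unless guess_integer = TRUE is set, which is consistent with the dbl columns in the read_csv() output shown in these slides.

```r
library(readr)

guess_parser(c("TRUE", "FALSE"))                 # "logical"
guess_parser(c("1", "2"))                        # "double"
guess_parser(c("1", "2"), guess_integer = TRUE)  # "integer"
guess_parser("1,234,566")                        # "number"
guess_parser("2010-10-10")                       # "date"

# A single unexpected string pushes the whole column to character ...
guess_parser(c("1", "--"))                       # "character"
# ... unless it is declared as a missing-value marker
guess_parser(c("1", "--"), na = "--")            # "double"
```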

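When readr's automatic parsing runs into problems:

- Use problems() to inspect the issues encountered while reading
- Set the relevant read_*() arguments as needed, e.g. na = "--"
- Set the col_types = cols(...) argument of read_*() directly
- Read all the data as character and then convert the types with type_convert()
- Deal with it in a later data-wrangling step (e.g. dplyr::mutate() + parse_*())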

    # Set the missing-value argument
    read_csv("data/ex_flights.csv", na = "--") %>%
      spec()
    #> cols(
    #>   month = col_double(),
    #>   day = col_double(),
    #>   dep_time = col_double(),
    #>   dep_delay = col_logical(),
    #>   tailnum = col_character(),
    #>   time_hour = col_datetime(format = "")
    #> )

    # Set col_types directly, see ?cols
    ex_flights <- read_csv(
      "data/ex_flights.csv",
      col_types = cols(               # cols_only()
        dep_delay = col_logical(),    # "l"
        tailnum = col_character(),    # "c"
        time_hour = col_datetime(),   # "T"
        .default = col_integer()
      )                               # col_types = "iiilcT"
    )
    #> Warning: One or more parsing issues, call `problems()` on
    #> your data frame for details, e.g.:
    #>   dat <- vroom(...)
    #>   problems(dat)

    problems(ex_flights)
    #> # A tibble: 17 × 5
    #>     row   col expected           actual file
    #>   <int> <int> <chr>              <chr>  <chr>
    #> 1     3     1 an integer         --     C:/Users/admin/Desktop/QFwR_2023.09/slides/L05_Im…
    #> 2     3     3 an integer         --     C:/Users/admin/Desktop/QFwR_2023.09/slides/L05_Im…
    #> 3     3     4 1/0/T/F/TRUE/FALSE --     C:/Users/admin/Desktop/QFwR_2023.09/slides/L05_Im…
    #> # ℹ 14 more rows

    # Read everything as character, then convert the types
    read_csv(
      "data/ex_flights.csv",
      col_types = cols(
        .default = col_character()
      )
    ) %>%
      type_convert(col_types = "iiilcT")
    #> # A tibble: 20 × 6
    #>   month   day dep_time dep_delay tailnum time_hour
    #>   <int> <int>    <int> <lgl>     <chr>   <dttm>
    #> 1     6    26      932 TRUE      --      2013-06-26 12:00:00
    #> 2    NA     5       NA NA        --      2013-12-05 17:00:00
    #> 3     7    20      656 FALSE     N17245  2013-07-20 10:00:00
    #> # ℹ 17 more rows
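The remaining option, fixing types in a later wrangling step with dplyr::mutate() and parse_*(), might look like the following sketch. It uses a tiny made-up inline table rather than data/ex_flights.csv so that it runs on its own; `raw` and `clean` are illustrative names.

```r
library(readr)
library(dplyr)

# Read everything as character first (hypothetical inline data with "--" as missing)
raw <- read_csv(
  I("month,dep_delay\n6,TRUE\n--,--\n7,FALSE\n"),
  col_types = cols(.default = col_character())
)

# Then parse column by column, declaring "--" as the missing-value marker
clean <- raw %>%
  mutate(
    month     = parse_integer(month, na = "--"),
    dep_delay = parse_logical(dep_delay, na = "--")
  )
clean
```

Parsing column by column is more verbose than setting na = "--" at read time, but it helps when different columns use different missing-value markers or formats.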

Reading several files of the same kind at once

    # Generate example files of the same kind
    nycflights13::flights %>%
      split(.$carrier) %>%
      purrr::iwalk(~ write_tsv(.x, glue::glue("data/flights_{.y}.tsv")))

    # Vector of file paths
    (files <- dir(path = "data/", pattern = "\\.tsv$", full.names = TRUE))
    #>  [1] "data/flights_9E.tsv" "data/flights_AA.tsv" "data/flights_AS.tsv"
    #>  [4] "data/flights_B6.tsv" "data/flights_DL.tsv" "data/flights_EV.tsv"
    #>  [7] "data/flights_F9.tsv" "data/flights_FL.tsv" "data/flights_HA.tsv"
    #> [10] "data/flights_MQ.tsv" "data/flights_OO.tsv" "data/flights_UA.tsv"
    #> [13] "data/flights_US.tsv" "data/flights_VX.tsv" "data/flights_WN.tsv"
    #> [16] "data/flights_YV.tsv"

    # Read them all at once (row-bound into a single tibble)
    read_tsv(files, id = "fpath") # the id argument stores each file's path in the variable fpath
    #> # A tibble: 336,776 × 20
    #>   fpath         year month   day dep_time sched_dep_time dep_delay arr_time sched_arr_time
    #>   <chr>        <dbl> <dbl> <dbl>    <dbl>          <dbl>     <dbl>    <dbl>          <dbl>
    #> 1 data/flight…  2013     1     1      810            810         0     1048           1037
    #> 2 data/flight…  2013     1     1     1451           1500        -9     1634           1636
    #> 3 data/flight…  2013     1     1     1452           1455        -3     1637           1639
    #> # ℹ 336,773 more rows
    #> # ℹ 11 more variables: arr_delay <dbl>, carrier <chr>, flight <dbl>, tailnum <chr>,
    #> #   origin <chr>, dest <chr>, air_time <dbl>, distance <dbl>, hour <dbl>, minute <dbl>,
    #> #   time_hour <dttm>
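For a version of the multi-file pattern that runs without the nycflights13 data, the sketch below writes two tiny made-up files to a temporary directory and then recovers the carrier code from the stored file path. The file contents and the `from_file` column are assumptions for illustration.

```r
library(readr)

# Write two small, hypothetical files with identical columns
tmp <- tempfile("flights_demo_")
dir.create(tmp)
write_tsv(data.frame(carrier = "AA", flight = c(1, 2)), file.path(tmp, "flights_AA.tsv"))
write_tsv(data.frame(carrier = "UA", flight = 3),       file.path(tmp, "flights_UA.tsv"))

files <- dir(tmp, pattern = "\\.tsv$", full.names = TRUE)

# One call reads and row-binds both files; fpath records where each row came from
all_flights <- read_tsv(files, id = "fpath", show_col_types = FALSE)

# Recover the carrier code from the file name
all_flights$from_file <- sub("^flights_(.*)\\.tsv$", "\\1", basename(all_flights$fpath))
all_flights
```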

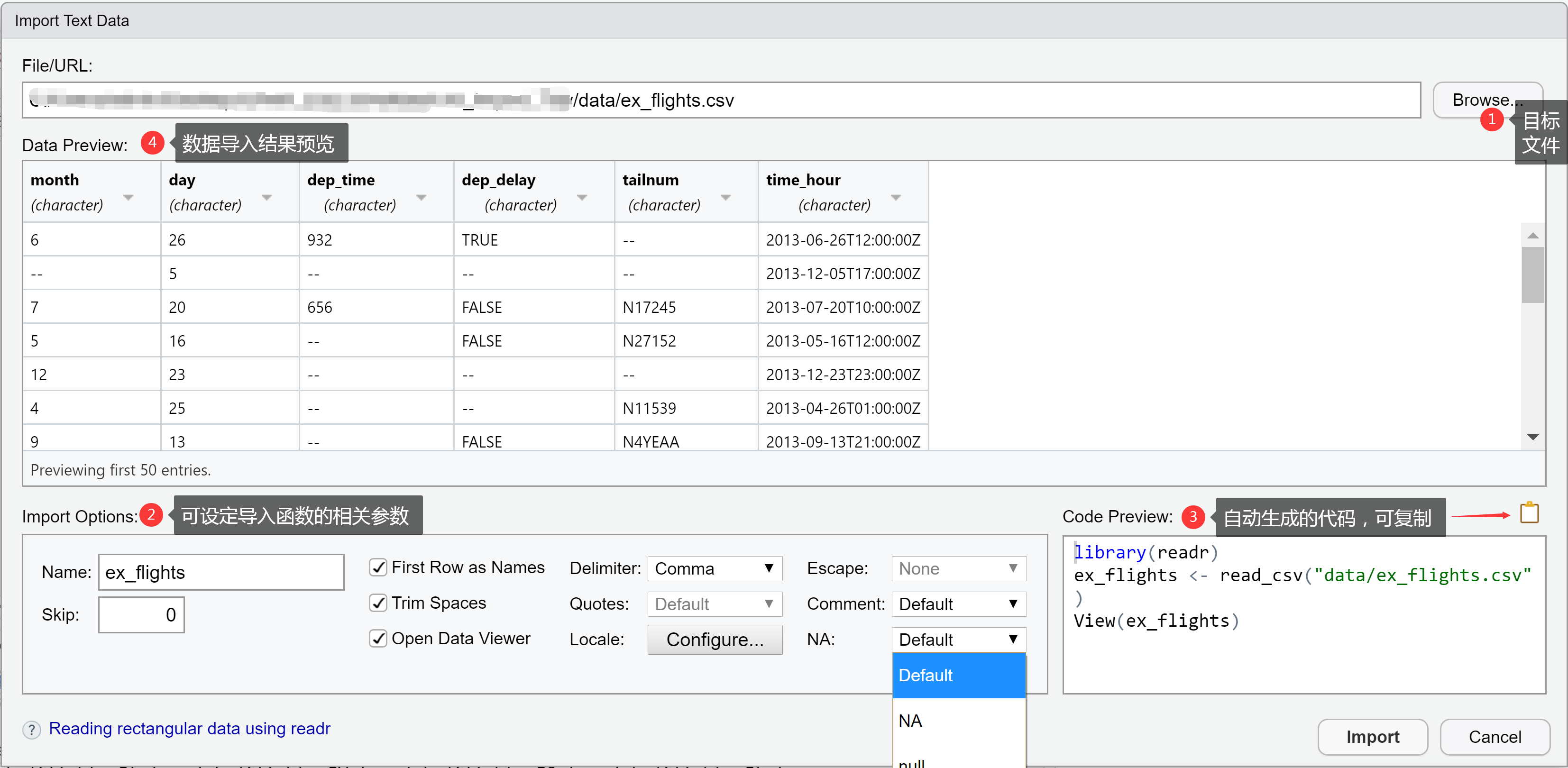

readr's graphical user interface *

* RStudio: Environment tab (top right) > Import Dataset > From Text (readr) ...

>> Reading other types of data

- readr::read_rds(): R data files (.rds)
- {{vroom}}: Read and Write Rectangular Text Data Quickly
- {{readxl}}: Excel files (.xls and .xlsx) <- take a 🧐 at it!
- {{haven}}: SPSS, Stata, and SAS files
- {{arrow}}: Apache Arrow

- Others: text, network, spatial, genome, image, and other types of data
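Unlike CSV, the .rds format keeps R-specific column types. A minimal round-trip sketch with readr's write_rds() and read_rds() follows; the `quotes` table is made up for illustration.

```r
library(readr)
library(tibble)

# Hypothetical table with a factor and a Date column
quotes <- tibble(
  ticker = factor(c("AAA", "BBB")),
  date   = as.Date(c("2023-10-24", "2023-10-25")),
  price  = c(10.5, 20)
)

path <- tempfile(fileext = ".rds")
write_rds(quotes, path)
restored <- read_rds(path)

# Column types survive the round trip, with no col_types needed
str(restored)
```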

2. Data Tidying

(Tidy Data)

>> Tidy data

Three conditions for tidy data 📐

Every column is a variable.

Every row is an observation.

Every cell is a single value.

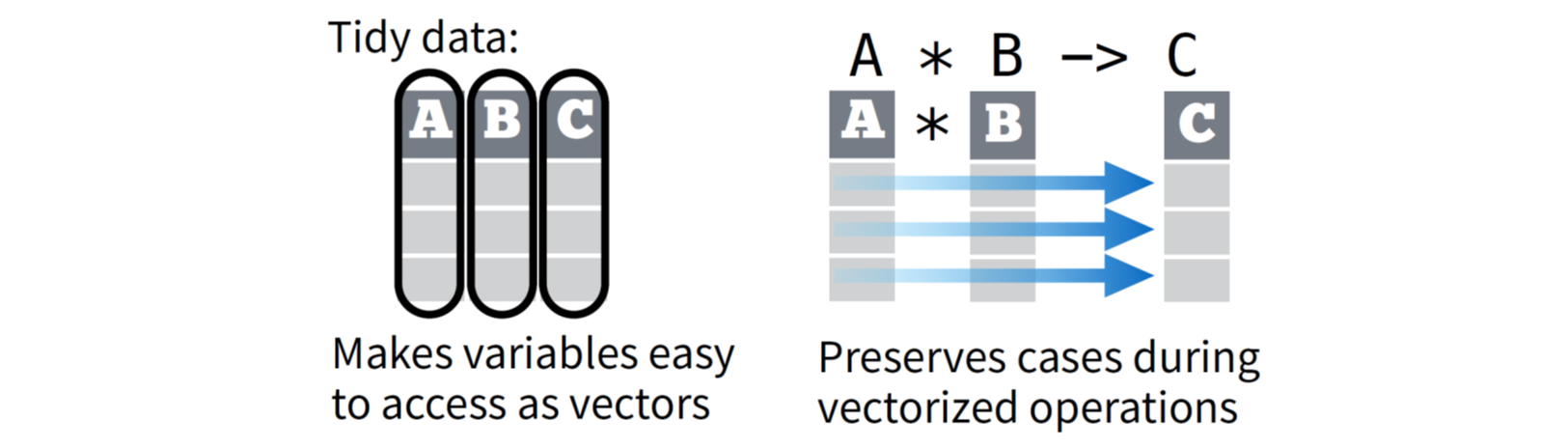

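Benefits of tidy data 💪

☑ Tidy data stores data in a logically consistent way, which makes it easier to learn and master the tools that work with it

☑ "Every column is a variable" and "every row is an observation" let you take advantage of R's vectorized operations

☑ The tidyverse packages (such as dplyr and ggplot2) are designed to take tidy data as input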
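The vectorization benefit is easy to see on table1, the tidy example table shipped with tidyr. Because each variable is one column, a new variable is a single vectorized expression. The rate per 10,000 people below is just an illustrative choice of scaling.

```r
library(dplyr)
library(tidyr)  # provides table1

# One vectorized expression computes the rate for every row
rates <- table1 %>%
  mutate(rate = cases / population * 10000)  # cases per 10,000 people
rates

# Grouped summaries work the same way
totals <- table1 %>%
  group_by(year) %>%
  summarise(total_cases = sum(cases))
totals
```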

But... not every dataset is tidy 😭

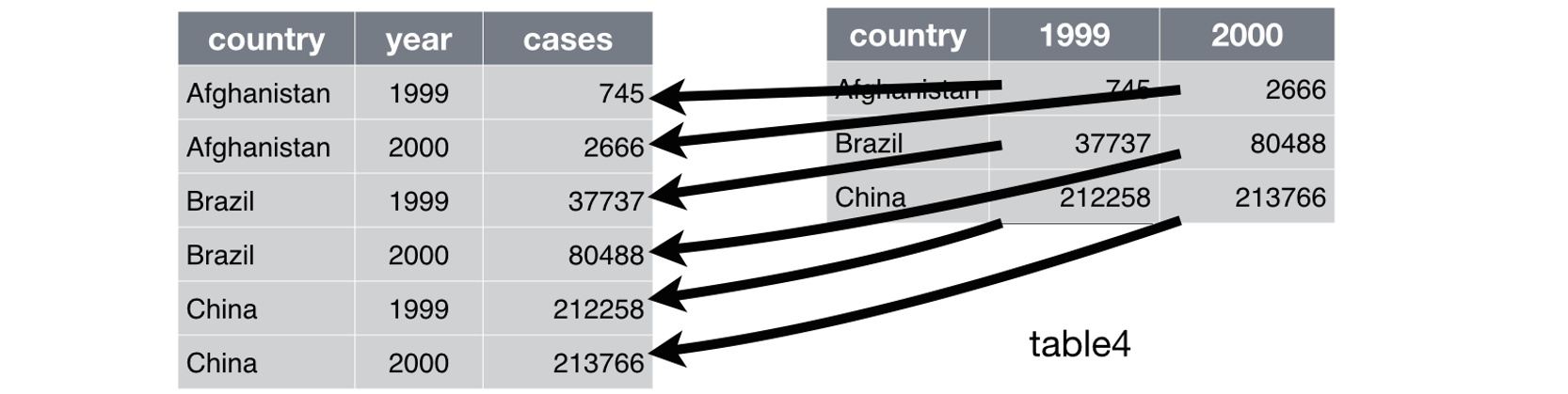

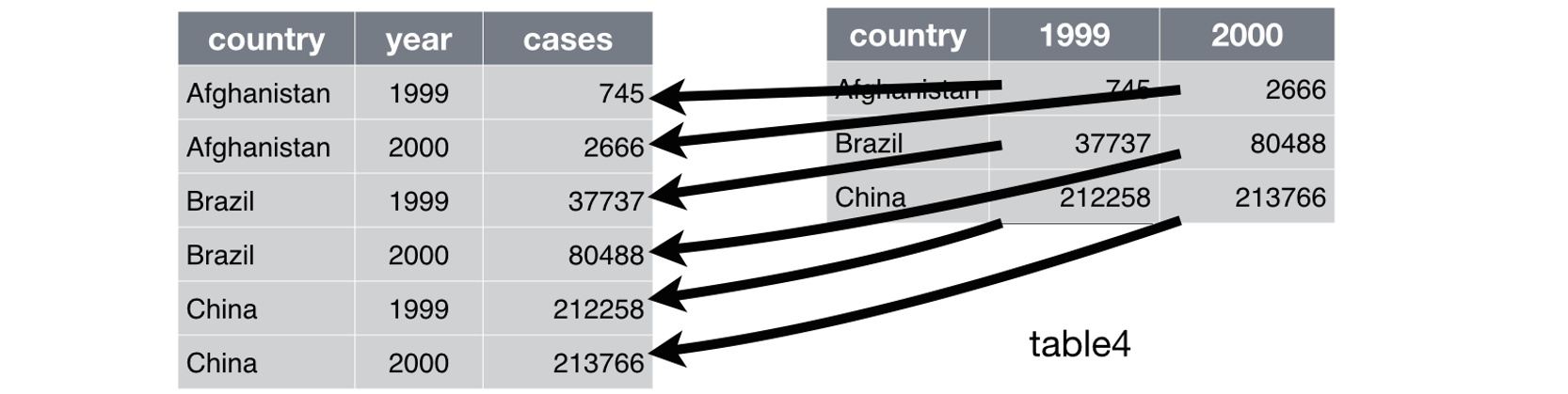

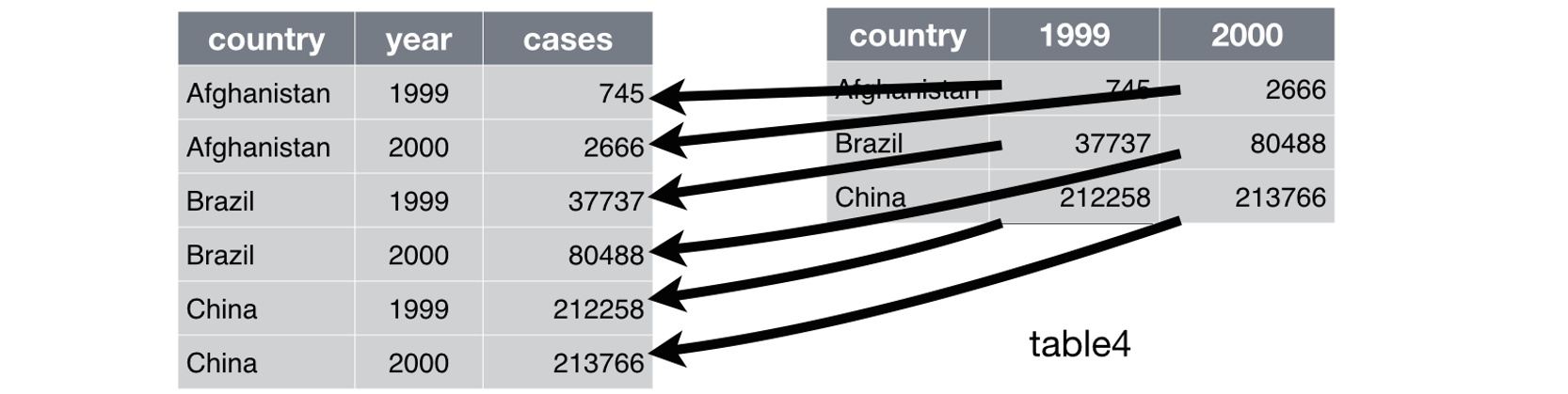

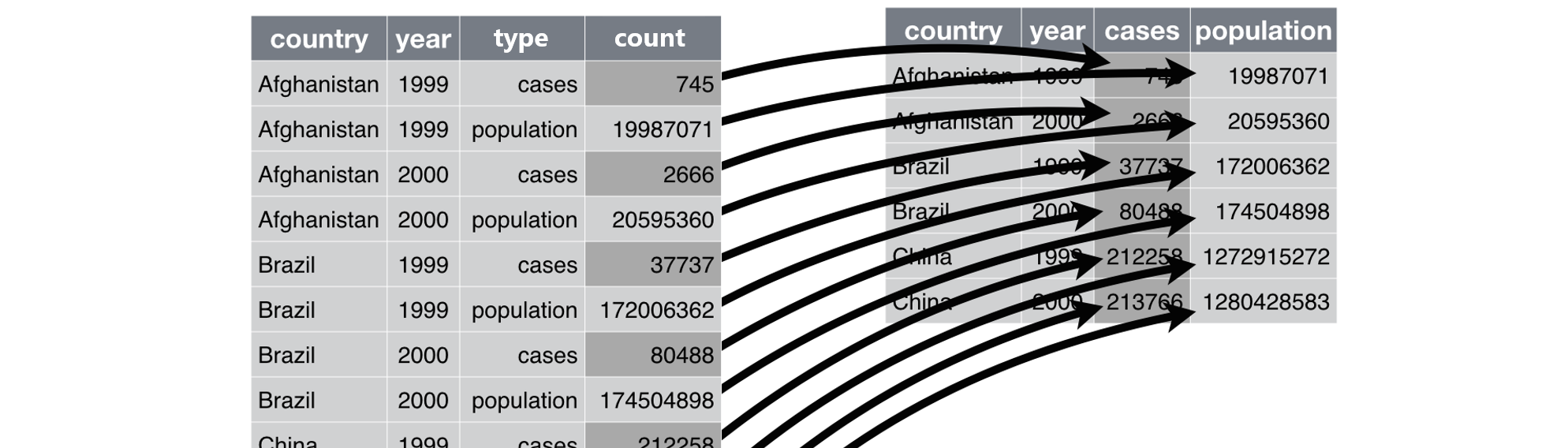

    table1
    #> # A tibble: 6 × 4
    #>   country      year  cases population
    #>   <chr>       <dbl>  <dbl>      <dbl>
    #> 1 Afghanistan  1999    745   19987071
    #> 2 Afghanistan  2000   2666   20595360
    #> 3 Brazil       1999  37737  172006362
    #> # ℹ 3 more rows

    table2
    #> # A tibble: 12 × 4
    #>   country      year type          count
    #>   <chr>       <dbl> <chr>         <dbl>
    #> 1 Afghanistan  1999 cases           745
    #> 2 Afghanistan  1999 population 19987071
    #> 3 Afghanistan  2000 cases          2666
    #> # ℹ 9 more rows

    table3
    #> # A tibble: 6 × 3
    #>   country      year rate
    #>   <chr>       <dbl> <chr>
    #> 1 Afghanistan  1999 745/19987071
    #> 2 Afghanistan  2000 2666/20595360
    #> 3 Brazil       1999 37737/172006362
    #> # ℹ 3 more rows

    table4a # cases
    #> # A tibble: 3 × 3
    #>   country     `1999` `2000`
    #>   <chr>        <dbl>  <dbl>
    #> 1 Afghanistan    745   2666
    #> 2 Brazil       37737  80488
    #> 3 China       212258 213766

    table4b # population
    #> # A tibble: 3 × 3
    #>   country         `1999`     `2000`
    #>   <chr>            <dbl>      <dbl>
    #> 1 Afghanistan   19987071   20595360
    #> 2 Brazil       172006362  174504898
    #> 3 China       1272915272 1280428583

>> Time for the tidyr package 🎉

The functions in the tidyr package fall into roughly five groups:

- Pivoting between long and wide forms: pivot_longer() and pivot_wider()
- Splitting or combining character columns: separate_longer_delim() | separate_longer_position(), separate_wider_delim() | separate_wider_position() | separate_wider_regex(), and unite()
- Rectangling deeply nested list data: unnest_longer(), unnest_wider(), unnest_auto(), unnest(), and hoist()
- Handling missing values:
  - complete(data, ..., fill = list(), explicit = TRUE): Complete a data frame with missing combinations of data
  - expand(data, ...), crossing(...), nesting(...): Expand data frame to include all combinations of values
  - expand_grid(...): Create a tibble from all combinations of the inputted name-value pairs
  - full_seq(x, period, tol = 1e-06): Create the full sequence of values in a vector
  - drop_na(data, ...): Drop rows containing missing values
  - fill(data, ..., .direction = c("down", "up", "downup", "updown")): Fill in missing values with previous or next value
  - replace_na(data, replace, ...): Replace missing values with specified values
- Other functions: chop() | unchop(); pack() | unpack(); uncount(); nest()
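The missing-value helpers are often used together. The sketch below applies fill(), complete(), replace_na(), and drop_na() to a made-up quarterly table in which the year is recorded only once per block and one quarter is absent altogether.

```r
library(tidyr)
library(tibble)

# Hypothetical data: year written once per block; 2023 Q3 is missing implicitly
sales <- tibble(
  year    = c(2022, NA, NA, NA, 2023, NA, NA),
  quarter = c(1, 2, 3, 4, 1, 2, 4),
  revenue = c(10, 12, NA, 15, 11, 13, 16)
)

# Carry the last observed year downwards
filled <- sales %>% fill(year, .direction = "down")

# Make the implicit gap (2023 Q3) explicit as a row with NA revenue
completed <- filled %>% complete(year, quarter)

# Two ways to deal with the explicit NAs
zero_filled <- completed %>% replace_na(list(revenue = 0))
dropped     <- completed %>% drop_na(revenue)
completed
```

Whether to replace or drop depends on what a missing value means in the data at hand; zero revenue and unknown revenue are different claims.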

>> pivot_longer() and pivot_wider()

    pivot_longer(
      data,
      cols,
      ...,
      cols_vary = "fastest",
      names_to = "name",
      names_prefix = NULL,
      names_sep = NULL,
      names_pattern = NULL,
      names_ptypes = NULL,
      names_transform = NULL,
      names_repair = "check_unique",
      values_to = "value",
      values_drop_na = FALSE,
      values_ptypes = NULL,
      values_transform = NULL
    )

    table4a %>%
      pivot_longer(
        cols = c(`1999`, `2000`),
        names_to = "year",
        values_to = "cases"
      )
    # table4b %>% ...
    #> # A tibble: 6 × 3
    #>   country     year  cases
    #>   <chr>       <chr> <dbl>
    #> 1 Afghanistan 1999    745
    #> 2 Afghanistan 2000   2666
    #> 3 Brazil      1999  37737
    #> # ℹ 3 more rows
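One way to finish the table4a/table4b example is to pivot both tables and join them, as sketched below. The use of names_transform to turn the year labels into integers and the object name `tidy4` are choices made here, not part of the slides.

```r
library(dplyr)
library(tidyr)

cases <- table4a %>%
  pivot_longer(
    cols = c(`1999`, `2000`),
    names_to = "year",
    names_transform = list(year = as.integer),  # "1999" -> 1999L
    values_to = "cases"
  )

population <- table4b %>%
  pivot_longer(
    cols = c(`1999`, `2000`),
    names_to = "year",
    names_transform = list(year = as.integer),
    values_to = "population"
  )

# Join the two long tables on their shared keys
tidy4 <- left_join(cases, population, by = c("country", "year"))
tidy4
```

The result has the same four variables as table1, one row per country and year.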

    pivot_wider(
      data,
      ...,
      id_cols = NULL,
      id_expand = FALSE,
      names_from = name,
      names_prefix = "",
      names_sep = "_",
      names_glue = NULL,
      names_sort = FALSE,
      names_vary = "fastest",
      names_expand = FALSE,
      names_repair = "check_unique",
      values_from = value,
      values_fill = NULL,
      values_fn = NULL,
      unused_fn = NULL
    )

    table2 %>%
      pivot_wider(
        names_from = type,
        values_from = count
      )
    #> # A tibble: 6 × 4
    #>   country      year  cases population
    #>   <chr>       <dbl>  <dbl>      <dbl>
    #> 1 Afghanistan  1999    745   19987071
    #> 2 Afghanistan  2000   2666   20595360
    #> 3 Brazil       1999  37737  172006362
    #> # ℹ 3 more rows
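pivot_wider() is also a convenient way to build a date-by-asset matrix from long data. The sketch below uses made-up returns for two hypothetical tickers and shows how a missing date-ticker combination appears as NA, then pivots back.

```r
library(tidyr)
library(tibble)

# Hypothetical long-format returns; BBB has no observation on the second date
returns_long <- tibble(
  date   = as.Date(c("2023-10-24", "2023-10-24", "2023-10-25")),
  ticker = c("AAA", "BBB", "AAA"),
  ret    = c(0.01, -0.02, 0.005)
)

# Long -> wide: one column per ticker, prefixed with "ret_"
returns_wide <- returns_long %>%
  pivot_wider(names_from = ticker, values_from = ret, names_prefix = "ret_")
returns_wide

# Wide -> long again; values_drop_na removes the NA cell created above
returns_back <- returns_wide %>%
  pivot_longer(
    cols = starts_with("ret_"),
    names_to = "ticker",
    names_prefix = "ret_",
    values_to = "ret",
    values_drop_na = TRUE
  )
returns_back
```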

>> separate_wider_*(), separate_longer_*(), and unite()

    separate_wider_delim|position|regex(
      data,
      cols,
      delim | widths | patterns,
      ...,
      names,
      names_sep = NULL,
      names_repair,
      too_few,
      too_many,
      cols_remove = TRUE
    )

    separate_longer_delim|position(
      data,
      cols,
      delim | width,
      ...,
      keep_empty = FALSE
    )

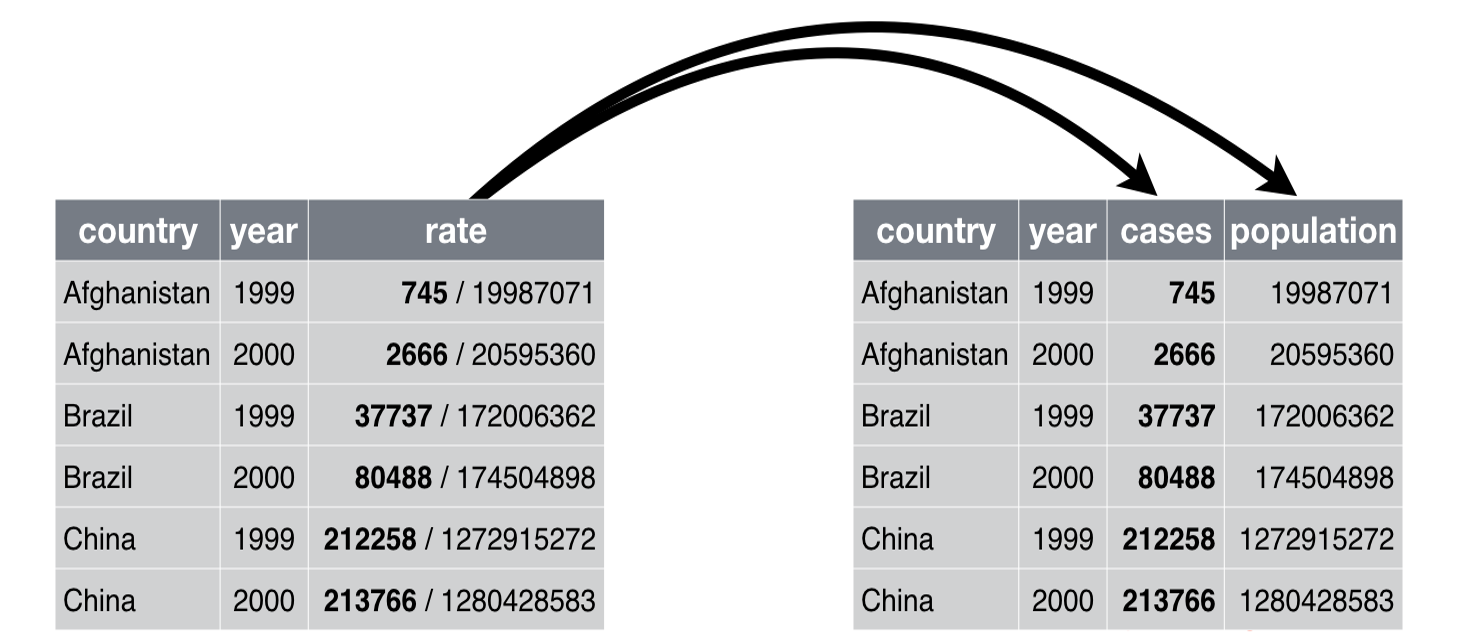

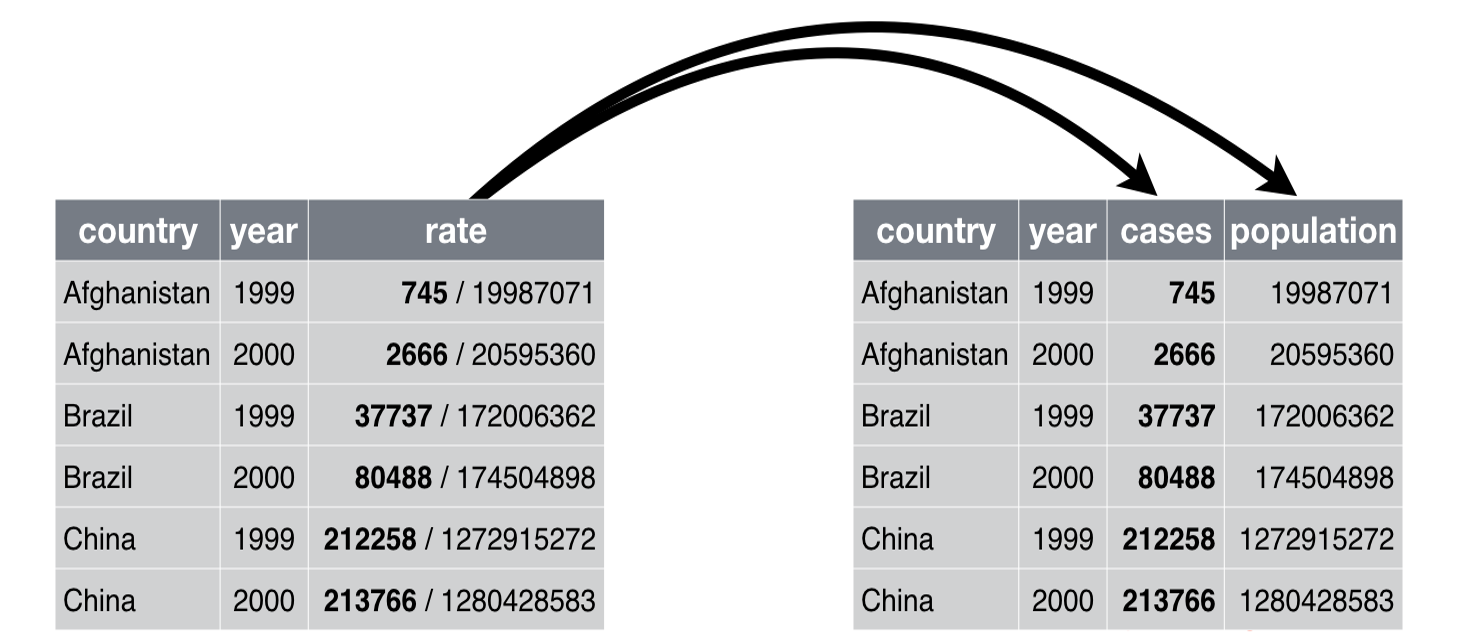

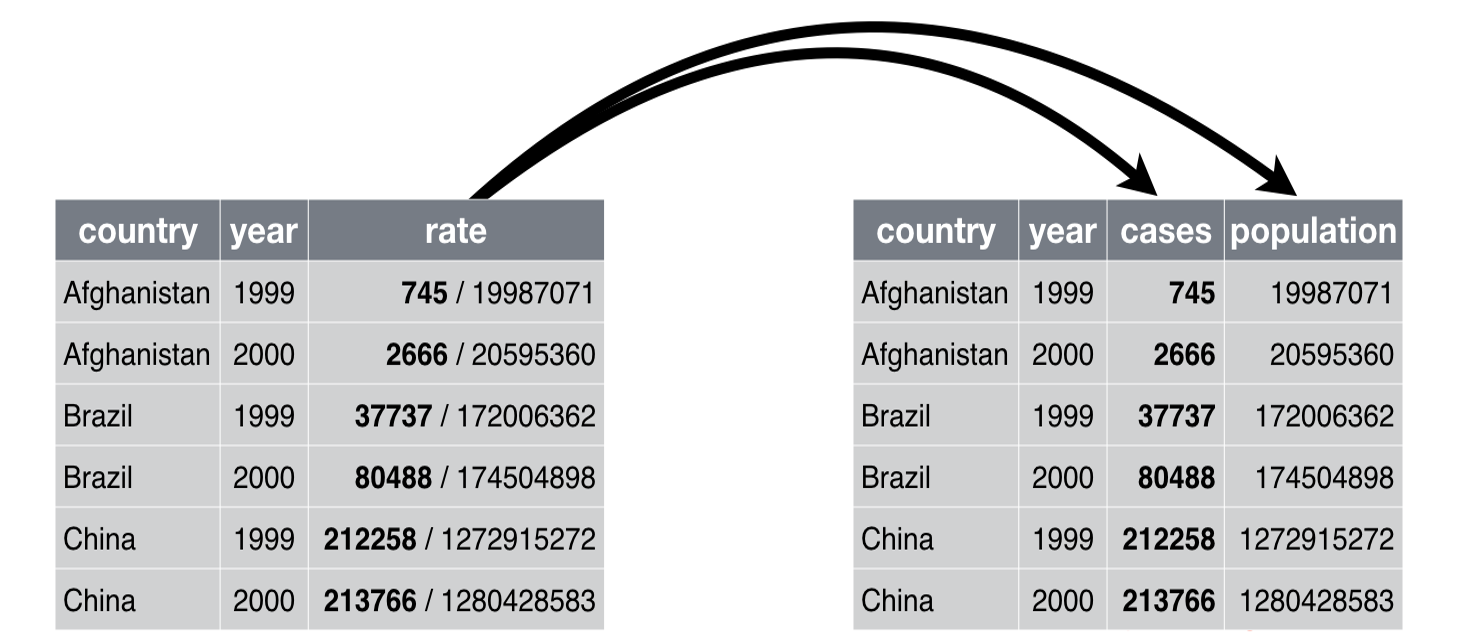

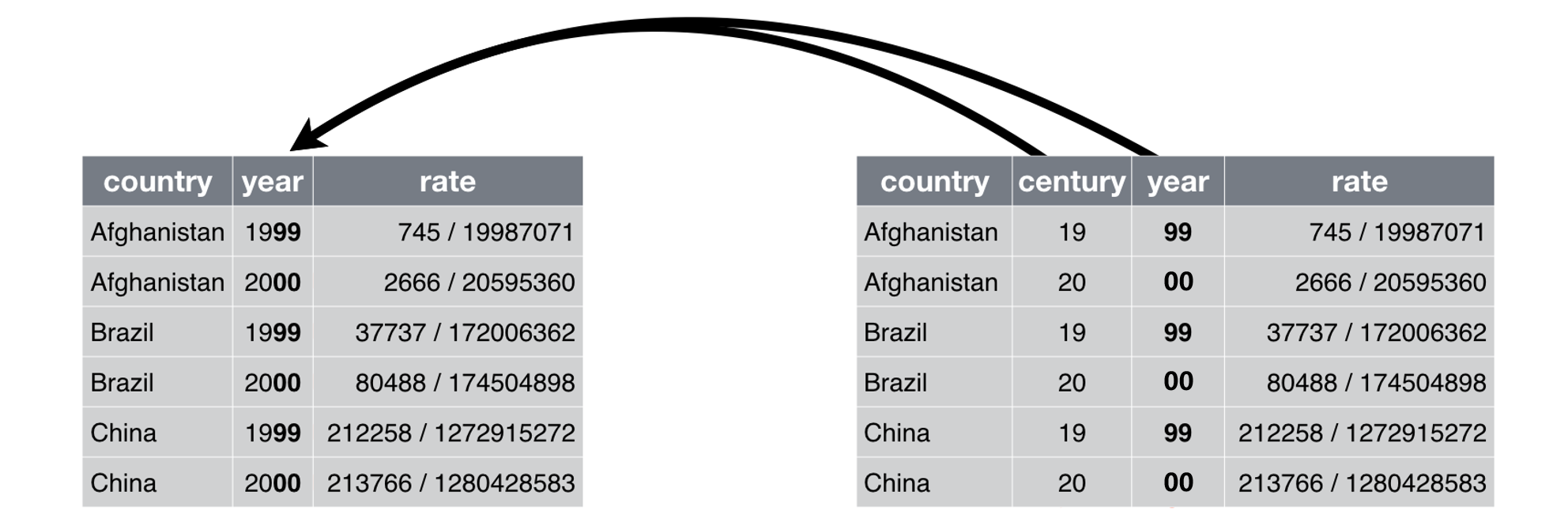

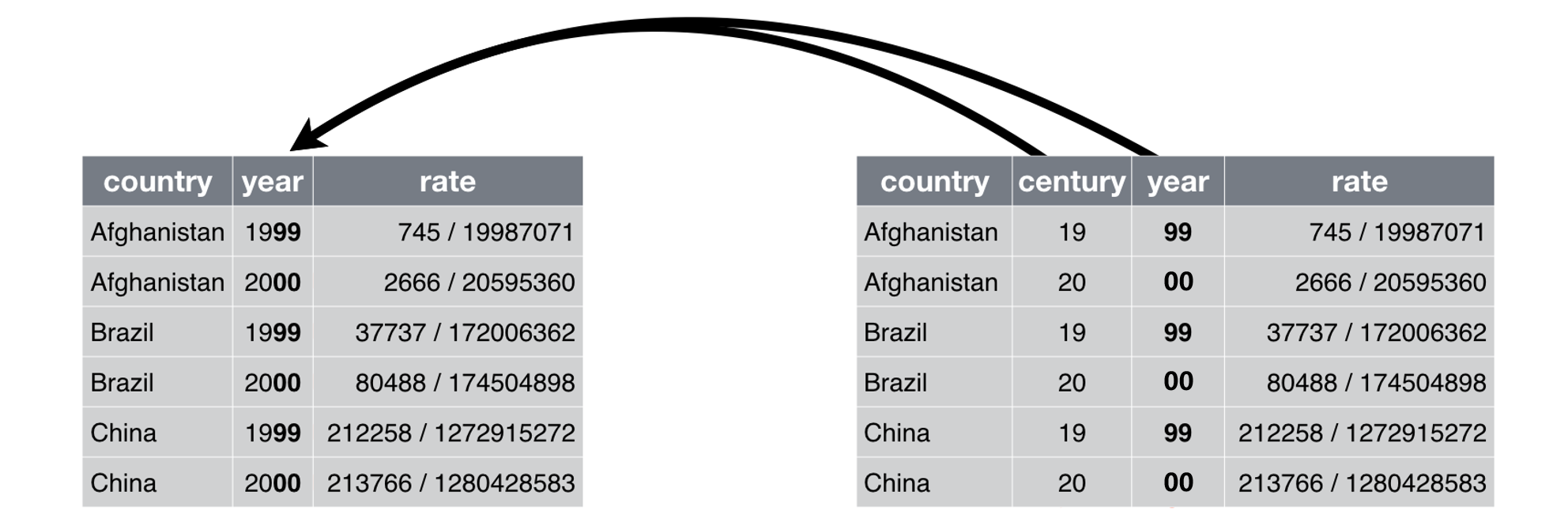

    table3 %>%
      separate_wider_delim(
        rate,
        delim = "/",
        names = c("cases", "population")
      )
    #> # A tibble: 6 × 4
    #>   country      year cases population
    #>   <chr>       <dbl> <chr> <chr>
    #> 1 Afghanistan  1999 745   19987071
    #> 2 Afghanistan  2000 2666  20595360
    #> 3 Brazil       1999 37737 172006362
    #> # ℹ 3 more rows
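The pieces produced by separate_wider_delim() are still character columns, so a conversion step usually follows. The sketch below adds that step and also shows the row-wise counterpart, separate_longer_delim(), on a made-up `holdings` table.

```r
library(dplyr)
library(tidyr)
library(tibble)

# Split into columns, then convert the new character columns to numbers
table3_tidy <- table3 %>%
  separate_wider_delim(rate, delim = "/", names = c("cases", "population")) %>%
  mutate(across(c(cases, population), as.numeric))
table3_tidy

# Hypothetical example: one row per fund, tickers packed into a single string
holdings <- tibble(fund = c("F1", "F2"), tickers = c("AAA;BBB", "CCC"))

# Split into rows instead of columns
holdings_long <- holdings %>%
  separate_longer_delim(tickers, delim = ";")
holdings_long
```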

    unite(
      data,
      col,
      ...,
      sep = "_",
      remove = TRUE,
      na.rm = FALSE
    )

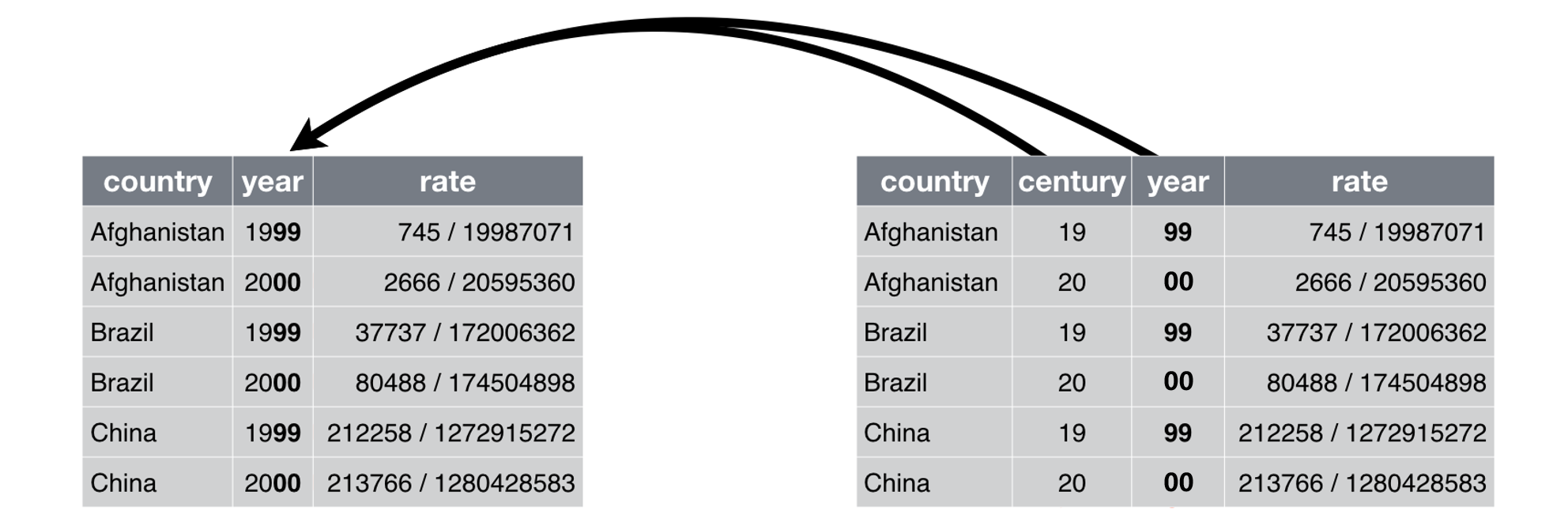

    table5 %>%
      unite(
        col = "year",
        century, year,
        sep = ""
      )
    #> # A tibble: 6 × 3
    #>   country     year  rate
    #>   <chr>       <chr> <chr>
    #> 1 Afghanistan 1999  745/19987071
    #> 2 Afghanistan 2000  2666/20595360
    #> 3 Brazil      1999  37737/172006362
    #> # ℹ 3 more rows
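unite() returns a character column, so the united year normally needs converting. The sketch below does that and also shows that separate_wider_position() can undo the unite; the intermediate column name `year4` is an illustrative choice.

```r
library(dplyr)
library(tidyr)

# Unite century and year, then convert the result to an integer
table5_year <- table5 %>%
  unite(col = "year", century, year, sep = "") %>%
  mutate(year = as.integer(year))
table5_year

# Round trip: unite into a 4-character string, then split it back by position
roundtrip <- table5 %>%
  unite(col = "year4", century, year, sep = "") %>%
  separate_wider_position(year4, widths = c(century = 2, year = 2))
roundtrip
```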