量化金融与金融编程

L10 tidyquant & Friends

曾永艺

厦门大学管理学院

2023-12-15

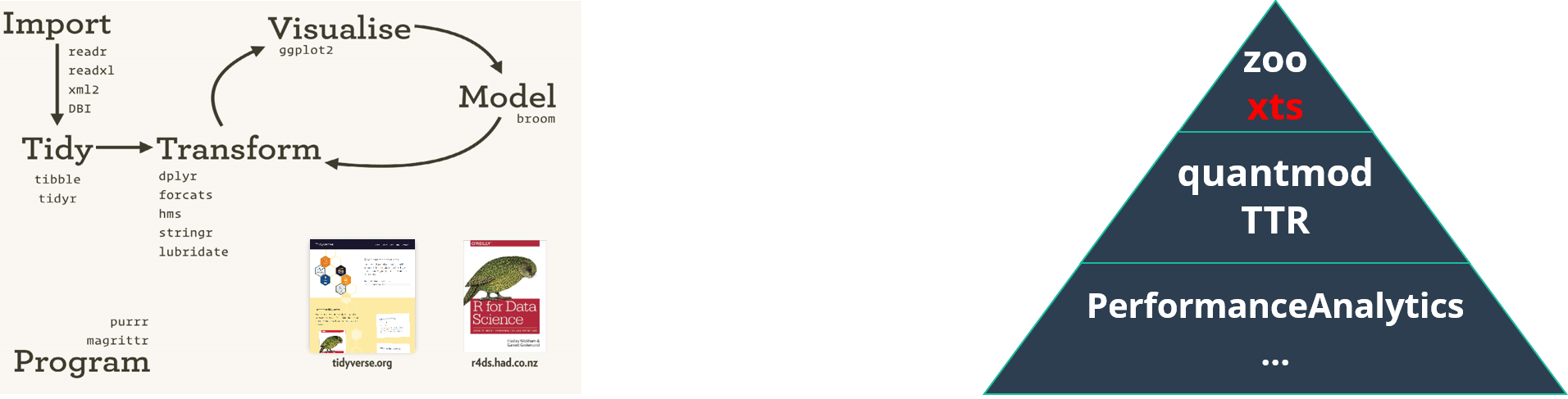

强大且通用的数据处理工具

分组运算

支持异质数据

代码可读性 > 运行性能

强大且通用的数据处理工具

分组运算

支持异质数据

代码可读性 > 运行性能

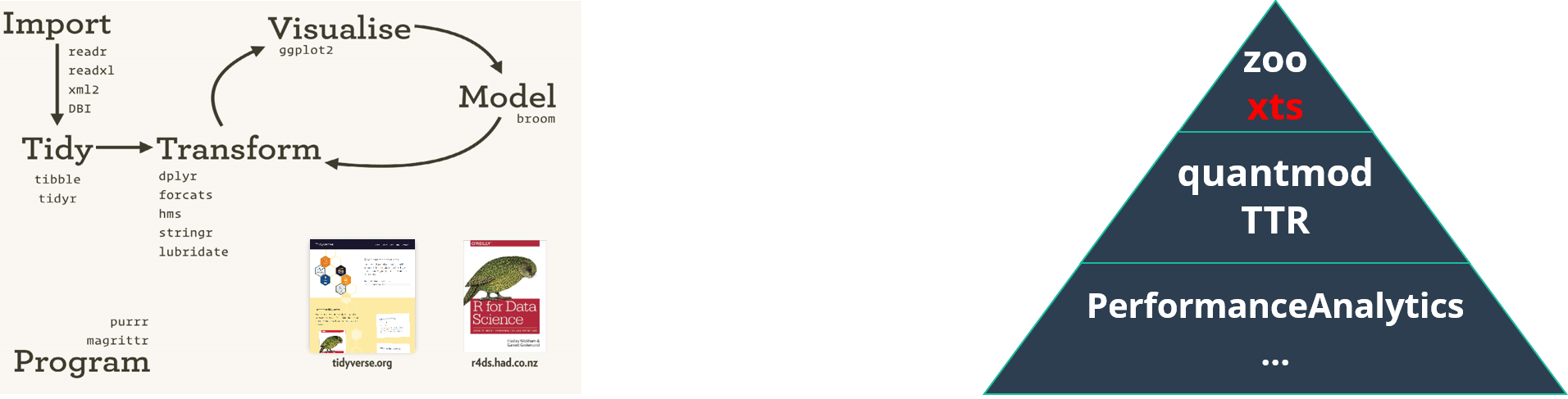

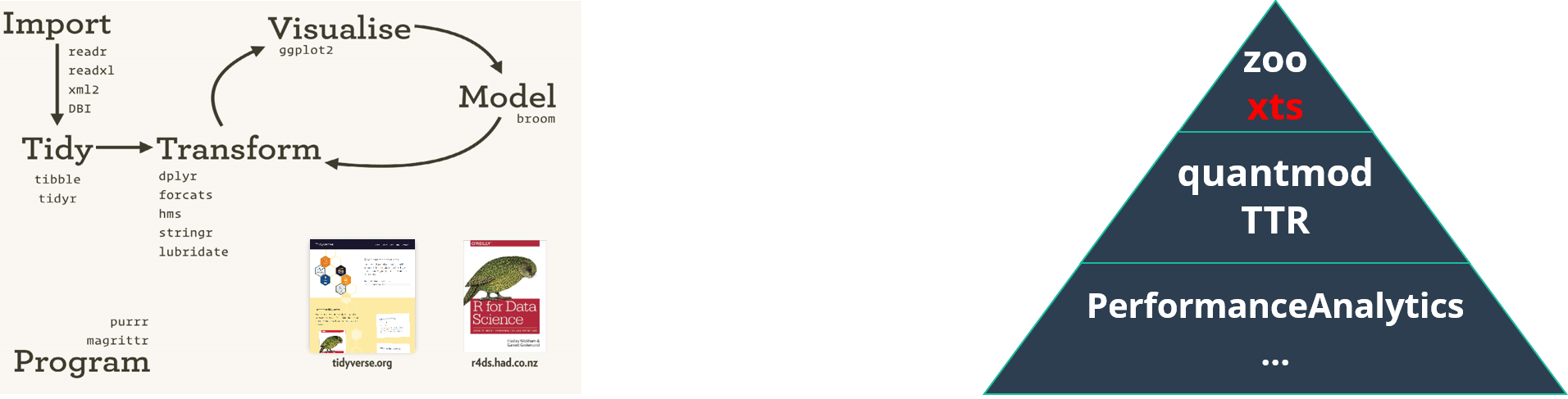

原生支持时间索引

专用且快速的基于时间的

数据处理同质数据(矩阵)

众多金融分析工具

强大且通用的数据处理工具

分组运算

支持异质数据

代码可读性 > 运行性能

原生支持时间索引

专用且快速的基于时间的

数据处理同质数据(矩阵)

众多金融分析工具

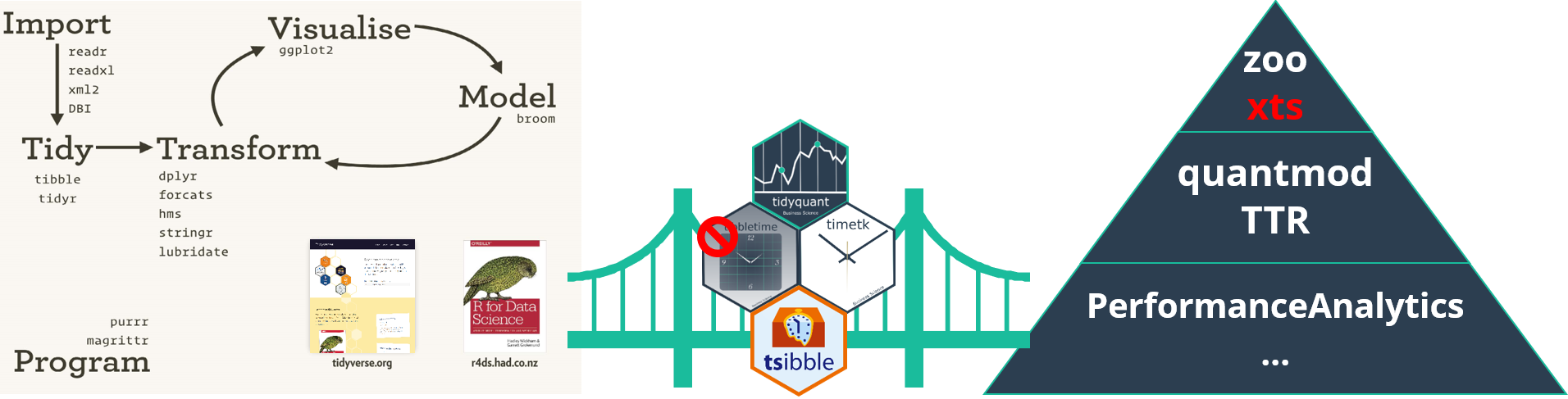

1. tidyquant 包 v1.0.7

Tidy Quantitative Financial Analysis

>> tidyquant 包

library(tidyverse)library(tidyquant) # install.packages("tidyquant")# tidyquant包会自动载入 lubridate, PerformanceAnalytics -> xts -> zoo, # quantmod -> xts / zoo / TTR 等包>> tidyquant 包

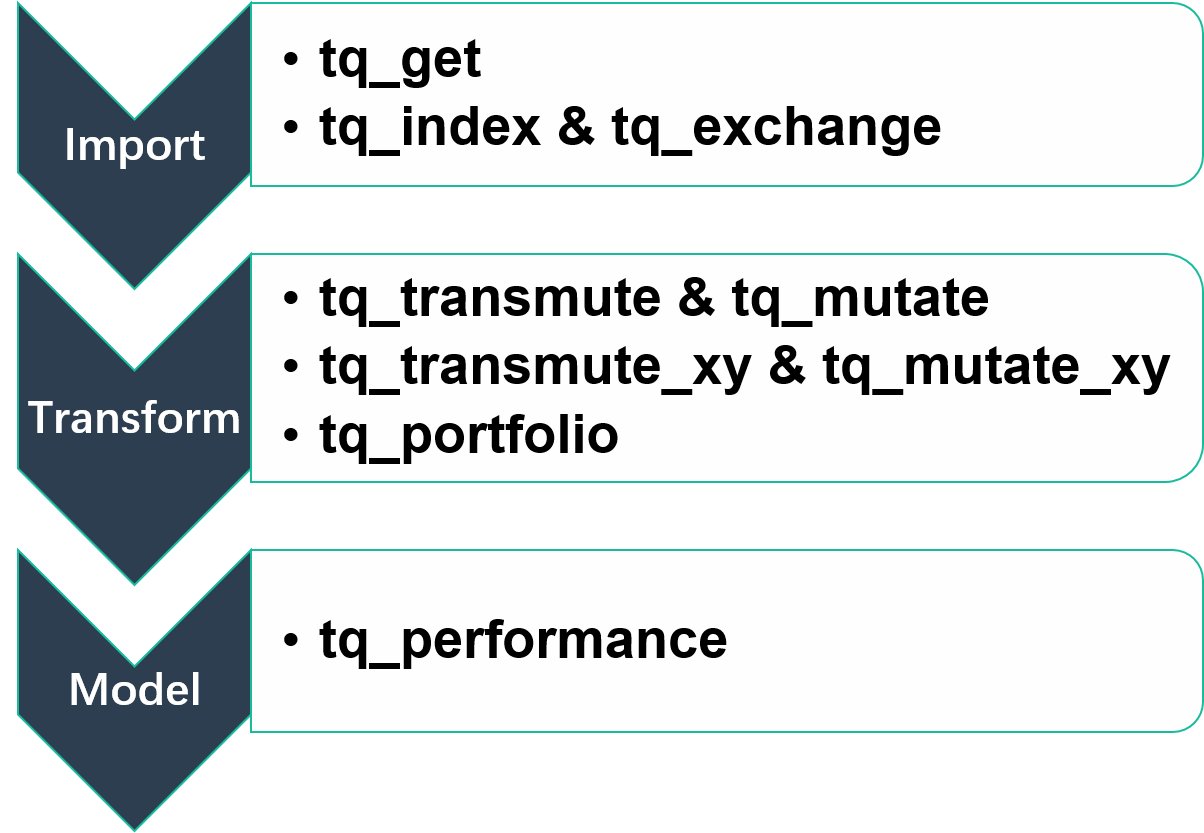

library(tidyverse)library(tidyquant) # install.packages("tidyquant")# tidyquant包会自动载入 lubridate, PerformanceAnalytics -> xts -> zoo, # quantmod -> xts / zoo / TTR 等包核心函数 👉

>> tidyquant:导入数据

# ?riingo::riingo_set_token # 按照 Details 的说明完成设置 # 需到 https://www.tiingo.com/ 注册账号获取令牌set.seed(123456)(SP500_sample <- tq_index("SP500") %>% # source: www.ssga.com slice_sample(n = 10) %>% pull("symbol") %>% tq_get( get = "tiingo", # ~~ get = "stock.prices" # yahoo ~~ from = "2018-12-31", to = "2022-12-31", complete_cases = TRUE # default )) # tq_*() returns data in tibble!#> # A tibble: 10,090 × 14#> symbol date open high low close volume adjusted adjHigh adjLow#> <chr> <dttm> <dbl> <dbl> <dbl> <dbl> <int> <dbl> <dbl> <dbl>#> 1 GE 2018-12-31 00:00:00 7.52 7.62 7.35 7.57 108361489 44.6 44.9 43.3#> 2 GE 2019-01-02 00:00:00 7.46 8.18 7.41 8.05 129354094 47.5 48.2 43.7#> 3 GE 2019-01-03 00:00:00 8.02 8.2 7.78 8.06 122949016 47.5 48.3 45.9#> # ℹ 10,087 more rows#> # ℹ 4 more variables: adjOpen <dbl>, adjVolume <int>, divCash <dbl>, splitFactor <dbl>>> tidyquant:导入数据

# tq_index() returns the stock symbol, company name, weight and sector# of every stock in an index. The source is www.ssga.com.tq_index_options()#> [1] "DOW" "DOWGLOBAL" "SP400" "SP500" "SP600"# tq_exchange() returns the stock symbol, company, last sale price, # market capitalization, sector and industry of every stock in an exchange. # The source is www.nasdaq.com.tq_exchange_options()#> [1] "AMEX" "NASDAQ" "NYSE"# tq_get() is a consolidated function that gets data from various web sources.tq_get_options()#> [1] "stock.prices" "stock.prices.japan" "dividends" "splits" #> [5] "economic.data" "quandl" "quandl.datatable" "tiingo" #> [9] "tiingo.iex" "tiingo.crypto" "alphavantager" "alphavantage" #> [13] "rblpapi">> tidyquant:数据转化

(SP500_ret <- SP500_sample %>% group_by(symbol) %>% tq_transmute( select = adjusted, # the columns passed to the mutate_fun mutate_fun = periodReturn, # mutation function col_rename = "dr", # a character vector to rename columns # ...: parameters passed to the mutate_fun -> period = "daily", type = "log", leading = FALSE ))#> # A tibble: 10,090 × 3#> # Groups: symbol [10]#> symbol date dr#> <chr> <dttm> <dbl>#> 1 GE 2018-12-31 00:00:00 NA #> 2 GE 2019-01-02 00:00:00 0.0615 #> 3 GE 2019-01-03 00:00:00 0.00124#> # ℹ 10,087 more rows>> tidyquant:数据转化

tq_mutate_fun_options()[c("xts", "zoo", "quantmod")] # 27 + 14 + 25#> $xts#> [1] "apply.daily" "apply.monthly" "apply.quarterly" "apply.weekly" #> [5] "apply.yearly" "diff.xts" "lag.xts" "period.apply" #> [9] "period.max" "period.min" "period.prod" "period.sum" #> [13] "periodicity" "to.daily" "to.hourly" "to.minutes" #> [17] "to.minutes10" "to.minutes15" "to.minutes3" "to.minutes30" #> [21] "to.minutes5" "to.monthly" "to.period" "to.quarterly" #> [25] "to.weekly" "to.yearly" "to_period" #> #> $zoo#> [1] "rollapply" "rollapplyr" "rollmax" "rollmax.default" #> [5] "rollmaxr" "rollmean" "rollmean.default" "rollmeanr" #> [9] "rollmedian" "rollmedian.default" "rollmedianr" "rollsum" #> [13] "rollsum.default" "rollsumr" #> #> $quantmod#> [1] "allReturns" "annualReturn" "ClCl" "dailyReturn" #> [5] "Delt" "HiCl" "Lag" "LoCl" #> [9] "LoHi" "monthlyReturn" "Next" "OpCl" #> [13] "OpHi" "OpLo" "OpOp" "periodReturn" #> [17] "quarterlyReturn" "seriesAccel" "seriesDecel" "seriesDecr" #> [21] "seriesHi" "seriesIncr" "seriesLo" "weeklyReturn" #> [25] "yearlyReturn">> tidyquant:数据转化

tq_mutate_fun_options()[c("TTR", "PerformanceAnalytics")] # 64 + 7#> $TTR#> [1] "adjRatios" "ADX" "ALMA" "aroon" #> [5] "ATR" "BBands" "CCI" "chaikinAD" #> [9] "chaikinVolatility" "CLV" "CMF" "CMO" #> [13] "CTI" "DEMA" "DonchianChannel" "DPO" #> [17] "DVI" "EMA" "EMV" "EVWMA" #> [21] "GMMA" "growth" "HMA" "keltnerChannels" #> [25] "KST" "lags" "MACD" "MFI" #> [29] "momentum" "OBV" "PBands" "ROC" #> [33] "rollSFM" "RSI" "runCor" "runCov" #> [37] "runMAD" "runMax" "runMean" "runMedian" #> [41] "runMin" "runPercentRank" "runSD" "runSum" #> [45] "runVar" "SAR" "SMA" "SMI" #> [49] "SNR" "stoch" "TDI" "TR" #> [53] "TRIX" "ultimateOscillator" "VHF" "volatility" #> [57] "VWAP" "VWMA" "wilderSum" "williamsAD" #> [61] "WMA" "WPR" "ZigZag" "ZLEMA" #> #> $PerformanceAnalytics#> [1] "Return.annualized" "Return.annualized.excess" "Return.clean" #> [4] "Return.cumulative" "Return.excess" "Return.Geltner" #> [7] "zerofill">> tidyquant:数据分析

# use mutate() or summarise()SP500_ret %>% filter(!is.na(dr)) %>% summarise( mean_ret = mean(dr), sd_ret = sd(dr), n_ret = n() ) %>% print(n = 10)#> # A tibble: 10 × 4#> symbol mean_ret sd_ret n_ret#> <chr> <dbl> <dbl> <int>#> 1 CMG 0.00116 0.0234 1008#> 2 COO 0.000260 0.0190 1008#> 3 EXC 0.000425 0.0190 1008#> 4 FIS -0.000354 0.0234 1008#> 5 FTNT 0.00123 0.0286 1008#> 6 GE 0.000377 0.0280 1008#> 7 ODFL 0.00124 0.0217 1008#> 8 PPG 0.000273 0.0202 1008#> 9 TEL 0.000485 0.0212 1008#> 10 WFC 0.00000548 0.0253 1008>> tidyquant:数据分析

# use mutate() or summarise()SP500_ret %>% filter(!is.na(dr)) %>% summarise( mean_ret = mean(dr), sd_ret = sd(dr), n_ret = n() ) %>% print(n = 10)#> # A tibble: 10 × 4#> symbol mean_ret sd_ret n_ret#> <chr> <dbl> <dbl> <int>#> 1 CMG 0.00116 0.0234 1008#> 2 COO 0.000260 0.0190 1008#> 3 EXC 0.000425 0.0190 1008#> 4 FIS -0.000354 0.0234 1008#> 5 FTNT 0.00123 0.0286 1008#> 6 GE 0.000377 0.0280 1008#> 7 ODFL 0.00124 0.0217 1008#> 8 PPG 0.000273 0.0202 1008#> 9 TEL 0.000485 0.0212 1008#> 10 WFC 0.00000548 0.0253 1008# use tq_performance()SP500_ret %>% tq_performance( Ra = dr, # Rb = NULL, performance_fun = table.AnnualizedReturns, geometric = FALSE # ... )#> # A tibble: 10 × 4#> # Groups: symbol [10]#> symbol AnnualizedReturn#> <chr> <dbl>#> 1 GE 0.095 #> 2 PPG 0.0688#> 3 WFC 0.0014#> 4 COO 0.0656#> # ℹ 6 more rows#> # ℹ 2 more variables:#> # `AnnualizedSharpe(Rf=0%)` <dbl>,#> # AnnualizedStdDev <dbl>>> tidyquant:数据分析

tq_performance_fun_options()[1:4] # 19 + 13 + 7 + 9#> $table.funs#> [1] "table.AnnualizedReturns" "table.Arbitrary" "table.Autocorrelation" #> [4] "table.CAPM" "table.CaptureRatios" "table.Correlation" #> [7] "table.Distributions" "table.DownsideRisk" "table.DownsideRiskRatio"#> [10] "table.DrawdownsRatio" "table.HigherMoments" "table.InformationRatio" #> [13] "table.RollingPeriods" "table.SFM" "table.SpecificRisk" #> [16] "table.Stats" "table.TrailingPeriods" "table.UpDownRatios" #> [19] "table.Variability" #> #> $CAPM.funs#> [1] "CAPM.alpha" "CAPM.beta" "CAPM.beta.bear" "CAPM.beta.bull" #> [5] "CAPM.CML" "CAPM.CML.slope" "CAPM.dynamic" "CAPM.epsilon" #> [9] "CAPM.jensenAlpha" "CAPM.RiskPremium" "CAPM.SML.slope" "TimingRatio" #> [13] "MarketTiming" #> #> $SFM.funs#> [1] "SFM.alpha" "SFM.beta" "SFM.CML" "SFM.CML.slope" #> [5] "SFM.dynamic" "SFM.epsilon" "SFM.jensenAlpha"#> #> $descriptive.funs#> [1] "mean" "sd" "min" "max" "cor" #> [6] "mean.geometric" "mean.stderr" "mean.LCL" "mean.UCL">> tidyquant:数据分析

tq_performance_fun_options()[5:9] # 4 + 5 + 14 + 6 + 6#> $annualized.funs#> [1] "Return.annualized" "Return.annualized.excess" "sd.annualized" #> [4] "SharpeRatio.annualized" #> #> $VaR.funs#> [1] "VaR" "ES" "ETL" "CDD" "CVaR"#> #> $moment.funs#> [1] "var" "cov" "skewness" "kurtosis" #> [5] "CoVariance" "CoSkewness" "CoSkewnessMatrix" "CoKurtosis" #> [9] "CoKurtosisMatrix" "M3.MM" "M4.MM" "BetaCoVariance" #> [13] "BetaCoSkewness" "BetaCoKurtosis" #> #> $drawdown.funs#> [1] "AverageDrawdown" "AverageLength" "AverageRecovery" "DrawdownDeviation"#> [5] "DrawdownPeak" "maxDrawdown" #> #> $Bacon.risk.funs#> [1] "MeanAbsoluteDeviation" "Frequency" "SharpeRatio" #> [4] "MSquared" "MSquaredExcess" "HurstIndex">> tidyquant:数据分析

tq_performance_fun_options()[10:14] # 12 + 4 + 7 + 20 + 3#> $Bacon.regression.funs#> [1] "CAPM.alpha" "CAPM.beta" "CAPM.epsilon" "CAPM.jensenAlpha"#> [5] "SystematicRisk" "SpecificRisk" "TotalRisk" "TreynorRatio" #> [9] "AppraisalRatio" "FamaBeta" "Selectivity" "NetSelectivity" #> #> $Bacon.relative.risk.funs#> [1] "ActivePremium" "ActiveReturn" "TrackingError" "InformationRatio"#> #> $Bacon.drawdown.funs#> [1] "PainIndex" "PainRatio" "CalmarRatio" "SterlingRatio" "BurkeRatio" #> [6] "MartinRatio" "UlcerIndex" #> #> $Bacon.downside.risk.funs#> [1] "DownsideDeviation" "DownsidePotential" "DownsideFrequency" #> [4] "SemiDeviation" "SemiVariance" "UpsideRisk" #> [7] "UpsidePotentialRatio" "UpsideFrequency" "BernardoLedoitRatio" #> [10] "DRatio" "Omega" "OmegaSharpeRatio" #> [13] "OmegaExcessReturn" "SortinoRatio" "M2Sortino" #> [16] "Kappa" "VolatilitySkewness" "AdjustedSharpeRatio" #> [19] "SkewnessKurtosisRatio" "ProspectRatio" #> #> $misc.funs#> [1] "KellyRatio" "Modigliani" "UpDownRatios">> tidyquant:vignettes & {{business science blog}}

browseVignettes(package = 'tidyquant')# tidyquant::TQ00-introduction-to-tidyquant# Introduction to tidyquant# tidyquant::TQ01-core-functions-in-tidyquant# Core Functions in tidyquant# tidyquant::TQ02-quant-integrations-in-tidyquant# R Quantitative Analysis Package Integrations in tidyquant# tidyquant::TQ03-scaling-and-modeling-with-tidyquant# Scaling Your Analysis with tidyquant# tidyquant::TQ04-charting-with-tidyquant# Charting with tidyquant# tidyquant::TQ05-performance-analysis-with-tidyquant# Performance Analysis with tidyquant# tidyquant::TQ06-excel-in-r# Excel in R - tidyquant 1.0.0>> tidyquant:{{r for excel user}}

FANG %>% pivot_table( .row = symbol, .columns = ~ year(date), .values = ~ PCT_CHANGE_FIRSTLAST( adjusted) )#> # A tibble: 4 × 5#> symbol `2013` `2014` `2015` `2016`#> <chr> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 0.550 -0.220 1.19 0.177 #> 2 FB 0.952 0.426 0.334 0.126 #> 3 GOOG 0.550 -0.0532 0.446 0.0404#> 4 NFLX 3.00 -0.0585 1.29 0.126emmmm,tidyquant::pivot_table() 接口真香!🌹 😍

>> tidyquant:{{r for excel user}}

FANG %>% pivot_table( .row = symbol, .columns = ~ year(date), .values = ~ PCT_CHANGE_FIRSTLAST( adjusted) )#> # A tibble: 4 × 5#> symbol `2013` `2014` `2015` `2016`#> <chr> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 0.550 -0.220 1.19 0.177 #> 2 FB 0.952 0.426 0.334 0.126 #> 3 GOOG 0.550 -0.0532 0.446 0.0404#> 4 NFLX 3.00 -0.0585 1.29 0.126emmmm,tidyquant::pivot_table() 接口真香!🌹 😍

当然,你也可以选择“呆在净土界”(stay in tidyverse),通过各式函数的管道组合来实现同样的结果!😎 👍

FANG %>% group_by(symbol, yr = year(date)) %>% summarise(ret = last(adjusted) / first(adjusted) - 1, .groups = "drop") %>% pivot_wider(id_cols = symbol, names_from = yr, values_from = ret)#> # A tibble: 4 × 5#> symbol `2013` `2014` `2015` `2016`#> <chr> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 0.550 -0.220 1.19 0.177 #> 2 FB 0.952 0.426 0.334 0.126 #> 3 GOOG 0.550 -0.0532 0.446 0.0404#> 4 NFLX 3.00 -0.0585 1.29 0.1262. tsibble 包 v1.1.3

Tidy Temporal Data Frames and Tools

Data Infrastructure for {{tidyverts}}

>> tsibble 包

library(tsibble) # install.packages("tsibble")>> tsibble 包

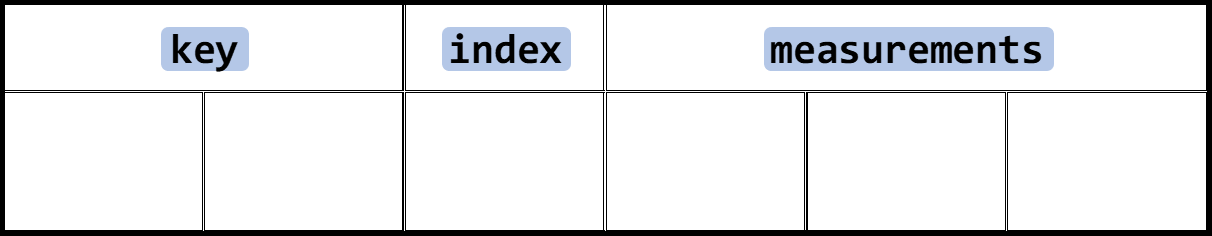

library(tsibble) # install.packages("tsibble")在 tbl_ts 数据结构中

keyis a set of variables that define observational units over time.indexis a variable with inherent ordering from past to present.Each observation should be uniquely identified by

keyandindex.Each observational unit should be measured at a common interval, if regularly spaced.

>> tsibble:as_tsibble() 强制转化为tbl_ts

# 将数据集FANG转化为tbl_tsdata(FANG) # 在tidyquant包中class(FANG) # FANG is a tibble#> [1] "tbl_df" "tbl" "data.frame">> tsibble:as_tsibble() 强制转化为tbl_ts

# 将数据集FANG转化为tbl_tsdata(FANG) # 在tidyquant包中class(FANG) # FANG is a tibble#> [1] "tbl_df" "tbl" "data.frame"FANG <- as_tsibble(FANG, key = symbol, index = date)# (x, key = NULL, index, regular = TRUE, validate = TRUE, .drop = TRUE, ...)class(FANG) # now FANG is a tsibble#> [1] "tbl_ts" "tbl_df" "tbl" "data.frame">> tsibble:as_tsibble() 强制转化为tbl_ts

# 将数据集FANG转化为tbl_tsdata(FANG) # 在tidyquant包中class(FANG) # FANG is a tibble#> [1] "tbl_df" "tbl" "data.frame"FANG <- as_tsibble(FANG, key = symbol, index = date)# (x, key = NULL, index, regular = TRUE, validate = TRUE, .drop = TRUE, ...)class(FANG) # now FANG is a tsibble#> [1] "tbl_ts" "tbl_df" "tbl" "data.frame"FANG#> # A tsibble: 4,032 x 8 [1D]#> # Key: symbol [4]#> symbol date open high low close volume adjusted#> <chr> <date> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 2013-01-02 256. 258. 253. 257. 3271000 257.#> 2 AMZN 2013-01-03 257. 261. 256. 258. 2750900 258.#> 3 AMZN 2013-01-04 258. 260. 257. 259. 1874200 259.#> # ℹ 4,029 more rows>> tsibble:filter_index() 选取时间子集

(FANG_2016 <- FANG %>% filter_index("2016"))#> # A tsibble: 1,008 x 8 [1D]#> # Key: symbol [4]#> symbol date open high low close volume adjusted#> <chr> <date> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628. 637. 9314500 637.#> 2 AMZN 2016-01-05 647. 647. 628. 634. 5822600 634.#> 3 AMZN 2016-01-06 622 640. 620. 633. 5329200 633.#> # ℹ 1,005 more rowsFANG_2016 %>% filter_index(~ "2016-01-05", "2016-12-29" ~ .)#> # A tsibble: 16 x 8 [1D]#> # Key: symbol [4]#> symbol date open high low close volume adjusted#> <chr> <date> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628. 637. 9314500 637.#> 2 AMZN 2016-01-05 647. 647. 628. 634. 5822600 634.#> 3 AMZN 2016-12-29 772. 773. 761. 765. 3153500 765.#> # ℹ 13 more rows>> tsibble:index_by() + summarise() 修改时间粒度

FANG_2016 %>% index_by()#> # A tsibble: 1,008 x 8 [1D]#> # Key: symbol [4]#> # Groups: @ date [252]#> symbol date open high low#> <chr> <date> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628.#> 2 AMZN 2016-01-05 647. 647. 628.#> 3 AMZN 2016-01-06 622 640. 620.#> # ℹ 1,005 more rows#> # ℹ 3 more variables: close <dbl>,#> # volume <dbl>, adjusted <dbl>FANG_2016 %>% group_by_key() %>% index_by()#> # A tsibble: 1,008 x 8 [1D]#> # Key: symbol [4]#> # Groups: symbol @ date [1,008]#> symbol date open high low#> <chr> <date> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628.#> 2 AMZN 2016-01-05 647. 647. 628.#> 3 AMZN 2016-01-06 622 640. 620.#> # ℹ 1,005 more rows#> # ℹ 3 more variables: close <dbl>,#> # volume <dbl>, adjusted <dbl>>> tsibble:index_by() + summarise() 修改时间粒度

FANG_2016 %>% index_by()#> # A tsibble: 1,008 x 8 [1D]#> # Key: symbol [4]#> # Groups: @ date [252]#> symbol date open high low#> <chr> <date> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628.#> 2 AMZN 2016-01-05 647. 647. 628.#> 3 AMZN 2016-01-06 622 640. 620.#> # ℹ 1,005 more rows#> # ℹ 3 more variables: close <dbl>,#> # volume <dbl>, adjusted <dbl>FANG_2016 %>% group_by_key() %>% index_by()#> # A tsibble: 1,008 x 8 [1D]#> # Key: symbol [4]#> # Groups: symbol @ date [1,008]#> symbol date open high low#> <chr> <date> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628.#> 2 AMZN 2016-01-05 647. 647. 628.#> 3 AMZN 2016-01-06 622 640. 620.#> # ℹ 1,005 more rows#> # ℹ 3 more variables: close <dbl>,#> # volume <dbl>, adjusted <dbl>FANG_2016 %>% group_by_key() %>% index_by( bimonth = ~ floor_date(., "2 months") ) %>% summarise( HIGH = max(high), LOW = min(low) )#> # A tsibble: 24 x 4 [1D]#> # Key: symbol [4]#> symbol bimonth HIGH LOW#> <chr> <date> <dbl> <dbl>#> 1 AMZN 2016-01-01 658. 474 #> 2 AMZN 2016-03-01 670. 539. #> 3 AMZN 2016-05-01 732. 656 #> 4 AMZN 2016-07-01 775. 717. #> 5 AMZN 2016-09-01 847. 756 #> 6 AMZN 2016-11-01 801. 710. #> 7 FB 2016-01-01 118. 89.4#> # ℹ 17 more rows>> tsibble:*_gaps() 检查、处理时间缺口

FANG_2016 %>% count_gaps()#> # A tibble: 208 × 4#> symbol .from .to .n#> <chr> <date> <date> <int>#> 1 AMZN 2016-01-09 2016-01-10 2#> 2 AMZN 2016-01-16 2016-01-18 3#> 3 AMZN 2016-01-23 2016-01-24 2#> 4 AMZN 2016-01-30 2016-01-31 2#> 5 AMZN 2016-02-06 2016-02-07 2#> 6 AMZN 2016-02-13 2016-02-15 3#> 7 AMZN 2016-02-20 2016-02-21 2#> 8 AMZN 2016-02-27 2016-02-28 2#> 9 AMZN 2016-03-05 2016-03-06 2#> 10 AMZN 2016-03-12 2016-03-13 2#> # ℹ 198 more rows# has_gaps() | scan_gaps() | fill_gaps()3. slider 包 v0.3.1

Sliding Window Functions

>> slider 包

purrr-like Type-stable Window Functions Over Any R Data Type

>> slider 包

purrr-like Type-stable Window Functions Over Any R Data Type

>> slider 包

purrr-like Type Stable Window Functions Over Any R Data Type

| Function | list | *_lgl | *_int | *_dbl | *_chr | *_dfc | *_dfr | *_vec |

|---|---|---|---|---|---|---|---|---|

| slide() | ||||||||

| slide_index() | ||||||||

| slide_period() | ||||||||

| slide2() | ||||||||

| slide_index2() | ||||||||

| slide_period2() | ||||||||

| pslide() | ||||||||

| pslide_index() | ||||||||

| pslide_period() | ||||||||

| [p]hop*() | - | - | - | - | - | - |

>> slide(.x, .f, ..., .before = 0L, .after = 0L, .step = 1L, .complete = FALSE)

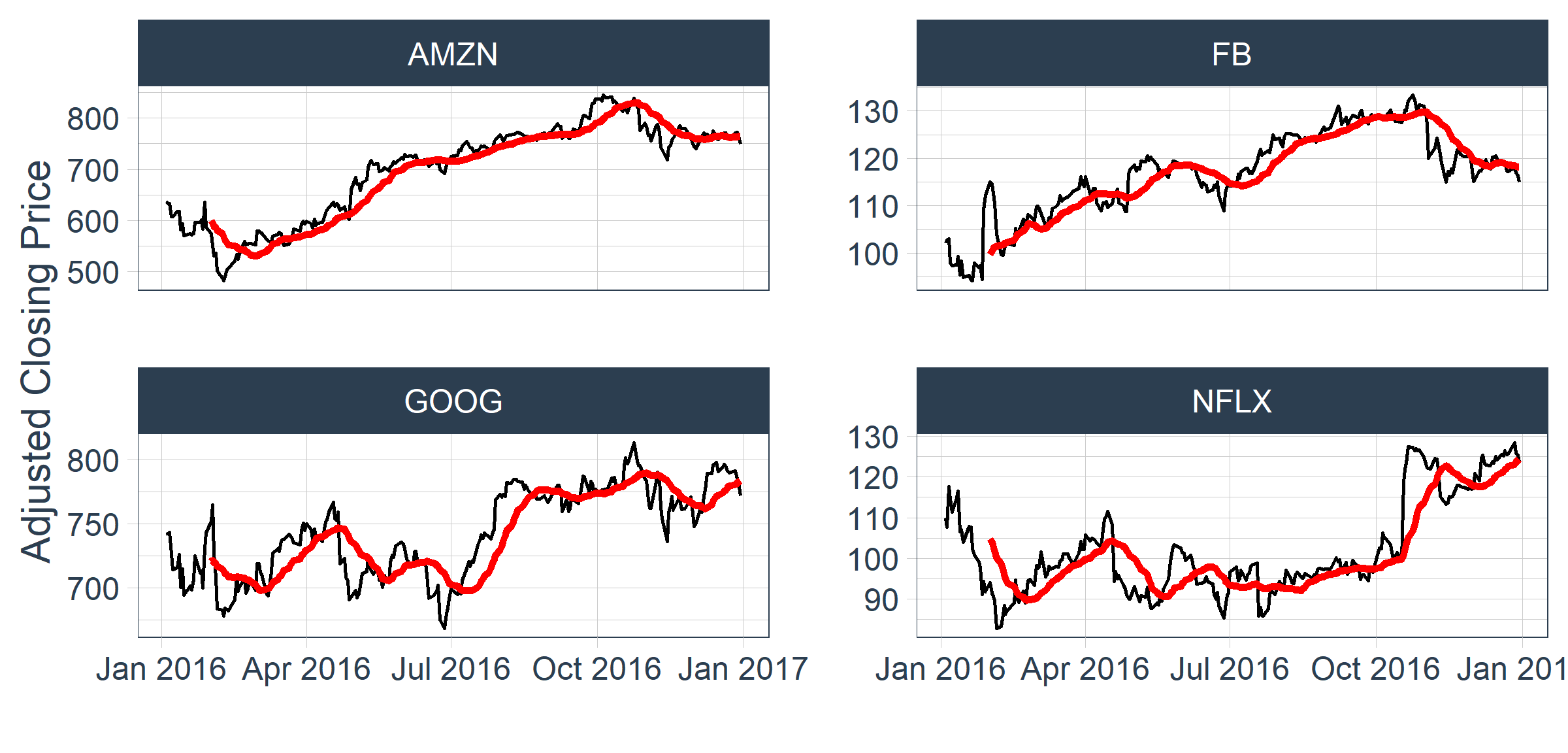

library(slider)FANG_2016 %>% group_by_key() %>% # 等同于group_by(symbol) mutate( ma5 = slide_dbl(adjusted, mean, .before = 4, .complete = TRUE), ma20 = slide_dbl(adjusted, ~ mean(.x), .before = 19, .complete = TRUE) )#> # A tsibble: 1,008 x 10 [1D]#> # Key: symbol [4]#> # Groups: symbol [4]#> symbol date open high low close volume adjusted ma5 ma20#> <chr> <date> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628. 637. 9314500 637. NA NA#> 2 AMZN 2016-01-05 647. 647. 628. 634. 5822600 634. NA NA#> 3 AMZN 2016-01-06 622 640. 620. 633. 5329200 633. NA NA#> 4 AMZN 2016-01-07 622. 630 605. 608. 7074900 608. NA NA#> 5 AMZN 2016-01-08 620. 624. 606 607. 5512900 607. 624. NA#> 6 AMZN 2016-01-11 612. 620. 599. 618. 4891600 618. 620. NA#> 7 AMZN 2016-01-12 625. 626. 612. 618. 4724100 618. 617. NA#> 8 AMZN 2016-01-13 621. 621. 579. 582. 7655200 582. 606. NA#> 9 AMZN 2016-01-14 580. 602. 570. 593 7238000 593 603. NA#> 10 AMZN 2016-01-15 572. 585. 565. 570. 7754500 570. 596. NA#> # ℹ 998 more rows>> slide(.x, .f, ..., .before = 0L, .after = 0L, .step = 1L, .complete = FALSE)

FANG_2016 %>% group_by_key() %>% mutate(ma20 = slide_dbl(adjusted, mean, .before = 19, .complete = TRUE)) %>% ggplot(aes(x = date)) + geom_line(aes(y = adjusted)) + geom_line(aes(y = ma20), color = "red", linewidth = 1) + labs(x = "", y = "Adjusted Closing Price") + facet_wrap(vars(symbol), ncol = 2, scales = "free_y") + theme_tq()

>> slide_index(.x, .i, .f, ..., .before = 0L, .after = 0L, .step = 1L,.complete = FALSE) 🆚 slide()

.step = 1L,FANG_2016 %>% group_by_key() %>% mutate( ma5 = slide_dbl(adjusted, mean, .before = 4, .complete = TRUE), ma5d = slide_index_dbl(adjusted, date, mean, .before = 4, .complete = TRUE) # .before = days(4) )#> # A tsibble: 1,008 x 10 [1D]#> # Key: symbol [4]#> # Groups: symbol [4]#> symbol date open high low close volume adjusted ma5 ma5d#> <chr> <date> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>#> 1 AMZN 2016-01-04 656. 658. 628. 637. 9314500 637. NA NA #> 2 AMZN 2016-01-05 647. 647. 628. 634. 5822600 634. NA NA #> 3 AMZN 2016-01-06 622 640. 620. 633. 5329200 633. NA NA #> 4 AMZN 2016-01-07 622. 630 605. 608. 7074900 608. NA NA #> 5 AMZN 2016-01-08 620. 624. 606 607. 5512900 607. 624. 624.#> 6 AMZN 2016-01-11 612. 620. 599. 618. 4891600 618. 620. 611.#> 7 AMZN 2016-01-12 625. 626. 612. 618. 4724100 618. 617. 614.#> 8 AMZN 2016-01-13 621. 621. 579. 582. 7655200 582. 606. 606.#> 9 AMZN 2016-01-14 580. 602. 570. 593 7238000 593 603. 603.#> 10 AMZN 2016-01-15 572. 585. 565. 570. 7754500 570. 596. 596.#> # ℹ 998 more rows>> slide_period(.x, .i, .period, .f, ..., .every = 1L, .origin=NULL, .before=0L, .after=0L, .complete=FALSE)

i <- c(as.Date("2019-08-30") + 0:2, as.Date("2019-11-30") + 0:2)i#> [1] "2019-08-30" "2019-08-31" "2019-09-01" "2019-11-30" "2019-12-01" "2019-12-02"slide_period(i, i, "month", ~ .x, .before = 1)#> [[1]]#> [1] "2019-08-30" "2019-08-31"#> #> [[2]]#> [1] "2019-08-30" "2019-08-31" "2019-09-01"#> #> [[3]]#> [1] "2019-11-30"#> #> [[4]]#> [1] "2019-11-30" "2019-12-01" "2019-12-02">> slide_period(.x, .i, .period, .f, ..., .every = 1L, .origin=NULL, .before=0L, .after=0L, .complete=FALSE)

i <- c(as.Date("2019-08-30") + 0:2, as.Date("2019-11-30") + 0:2)i#> [1] "2019-08-30" "2019-08-31" "2019-09-01" "2019-11-30" "2019-12-01" "2019-12-02"slide_period(i, i, "month", ~ .x, .before = 1)#> [[1]]#> [1] "2019-08-30" "2019-08-31"#> #> [[2]]#> [1] "2019-08-30" "2019-08-31" "2019-09-01"#> #> [[3]]#> [1] "2019-11-30"#> #> [[4]]#> [1] "2019-11-30" "2019-12-01" "2019-12-02"sales <- 1:6sales_df <- tibble(i = i, s = sales)bm_summary <- function(data) { summarise(data, idx = max(i), sales = sum(s))} slide_period_dfr( .x = sales_df, .i = sales_df$i, .period = "month", .before = 1, .f = bm_summary)#> # A tibble: 4 × 2#> idx sales#> <date> <int>#> 1 2019-08-31 3#> 2 2019-09-01 6#> 3 2019-11-30 4#> 4 2019-12-02 15>> hop(.x, .starts, .stops, .f, ...)

jan_1st_2016 <- floor_date( min(FANG_2016$date), "1 month")jan_1st_2017 <- ceiling_date( max(FANG_2016$date), "1 month")dates <- seq(jan_1st_2016, jan_1st_2017, "1 month")(results <- tibble( starts = dates[1:10], stops = dates[4:13] - 1))#> # A tibble: 10 × 2#> starts stops #> <date> <date> #> 1 2016-01-01 2016-03-31#> 2 2016-02-01 2016-04-30#> 3 2016-03-01 2016-05-31#> # ℹ 7 more rows>> hop(.x, .starts, .stops, .f, ...)

jan_1st_2016 <- floor_date( min(FANG_2016$date), "1 month")jan_1st_2017 <- ceiling_date( max(FANG_2016$date), "1 month")dates <- seq(jan_1st_2016, jan_1st_2017, "1 month")(results <- tibble( starts = dates[1:10], stops = dates[4:13] - 1))#> # A tibble: 10 × 2#> starts stops #> <date> <date> #> 1 2016-01-01 2016-03-31#> 2 2016-02-01 2016-04-30#> 3 2016-03-01 2016-05-31#> # ℹ 7 more rowsAMZN <- FANG_2016 %>% filter(symbol == "AMZN")results %>% mutate( total_volume = hop_index_vec( .x = AMZN$volume, .i = AMZN$date, .starts = starts, .stops = stops, .f = sum, .ptype = double() ) )#> # A tibble: 10 × 3#> starts stops total_volume#> <date> <date> <dbl>#> 1 2016-01-01 2016-03-31 348325200#> 2 2016-02-01 2016-04-30 296594000#> 3 2016-03-01 2016-05-31 263042800#> # ℹ 7 more rows4. 案例:上证50成分股

Case: SSE 50

>> 4.1 导入数据

# # 从_中证指数有限公司_官网得到“上证50”成分股列表并下载至本地# SSE50_path <- paste0(# "https://csi-web-dev.oss-cn-shanghai-finance-1-pub.aliyuncs.com/",# "static/html/csindex/public/uploads/file/autofile/cons/000016cons.xls")# download.file(SSE50_path, "data/SSE50cons.xls", mode = "wb")# # # 用readxl包的read_excel()读入(SSE50_cons <- readxl::read_excel( "data/SSE50cons.xls", # 这是我2022年11月下载的成分股列表 range = "E2:F51", col_names = c("stk_cd", "stk_nm") ) %>% mutate(stk_cd = str_c(stk_cd, ".SH")))#> # A tibble: 50 × 2#> stk_cd stk_nm #> <chr> <chr> #> 1 600010.SH 包钢股份#> 2 600690.SH 海尔智家#> 3 600837.SH 海通证券#> # ℹ 47 more rows>> 4.1 导入数据

# 利用WindR包提供的接口从Wind批量下载日行情数据,若同学没有Wind账号,可跳过此步# 安装WindR包:Wind|开始|插件修复|修复R插件 ...if(!file.exists("data/SSE50_close.rds")) { library(WindR) w.start(showmenu = FALSE) bgn <- "20190630"; end <- "20230630" SSE50_close <- SSE50_cons %>% mutate(price = map(stk_cd, w.wsd, "close", bgn, end, 'Priceadj=F')) write_rds(SSE50_close, "data/SSE50_close.rds") w.stop()}#> [1] "Welcome to use WIND Quant API for R (WindR)!"#> [1] "You can use w.menu to help yourself to create commands(WSD,WSS,WST,WSI,WSQ,...)!"#> [1] ""#> [1] "COPYRIGHT (C) 2013-2020 WIND INFORMATION CO., LTD. ALL RIGHTS RESERVED."#> [1] "IN NO CIRCUMSTANCE SHALL WIND BE RESPONSIBLE FOR ANY DAMAGES OR LOSSES CAUSED BY USING WIND QUANT API FOR R."#> $ErrorCode#> [1] 0#> #> $ErrorMsg#> [1] "OK!">> 4.1 导入数据

(SSE50_close <- read_rds("data/SSE50_close.rds"))#> # A tibble: 50 × 3#> stk_cd stk_nm price #> <chr> <chr> <list> #> 1 600010.SH 包钢股份 <named list [3]>#> 2 600690.SH 海尔智家 <named list [3]>#> 3 600837.SH 海通证券 <named list [3]>#> # ℹ 47 more rows# 将w.wsd()返回的列表解嵌套(SSE50 <- SSE50_close %>% unnest_wider(col = price) %>% # -> ErrorCode, Data, Code filter(ErrorCode == 0) %>% unnest(cols = Data) %>% select(stk_cd, stk_nm, date = DATETIME, close = CLOSE))#> # A tibble: 48,600 × 4#> stk_cd stk_nm date close#> <chr> <chr> <date> <dbl>#> 1 600010.SH 包钢股份 2019-07-01 1.69#> 2 600010.SH 包钢股份 2019-07-02 1.67#> 3 600010.SH 包钢股份 2019-07-03 1.65#> # ℹ 48,597 more rows>> 4.2 数据转换

# 根据日收盘价变量close计算日收益率SSE50_ret <- SSE50 %>% group_by(stk_cd, stk_nm) %>% arrange(date, .by_group = TRUE) %>% # 在此非必须,谨慎起见可加上 tq_transmute( select = close, mutate_fun = periodReturn, period = "daily", col_rename = "Ra" )SSE50_ret#> # A tibble: 47,279 × 4#> # Groups: stk_cd, stk_nm [50]#> stk_cd stk_nm date Ra#> <chr> <chr> <date> <dbl>#> 1 600010.SH 包钢股份 2019-07-01 0 #> 2 600010.SH 包钢股份 2019-07-02 -0.0117#> 3 600010.SH 包钢股份 2019-07-03 -0.0118#> # ℹ 47,276 more rows>> 4.3 数据分析

# 构建并计算等权重组合(作为基准组合)的收益率n_stocks <- SSE50_ret %>% pull(stk_cd) %>% unique() %>% length()wts <- rep(1 / n_stocks, times = n_stocks)baseline_ret <- SSE50_ret %>% tq_portfolio( # -> PerformanceAnalytics::Returns.portfolio() assets_col = stk_cd, returns_col = Ra, weights = wts, # 默认等权重,可不设定 col_rename = "Rb", rebalance_on = "months" # ... )baseline_ret#> # A tibble: 972 × 2#> date Rb#> <date> <dbl>#> 1 2019-07-01 0 #> 2 2019-07-02 0.000629#> 3 2019-07-03 -0.0103 #> # ℹ 969 more rows>> 4.3 数据分析

# 合并收益率数据SSE50_baseline <- left_join(SSE50_ret, baseline_ret, by = "date")# 基于CAPM的绩效评价结果SSE50_capm <- SSE50_baseline %>% tq_performance( Ra = Ra, Rb = Rb, performance_fun = table.CAPM ) %>% arrange(desc(AnnualizedAlpha))SSE50_capm#> # A tibble: 50 × 14#> # Groups: stk_cd, stk_nm [50]#> stk_cd stk_nm ActivePremium Alpha AnnualizedAlpha Beta `Beta-` `Beta+` Correlation#> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>#> 1 600905.SH 三峡能… 0.245 0.0011 0.323 0.666 0.932 0.192 0.293#> 2 600809.SH 山西汾… 0.255 0.001 0.291 1.16 1.33 0.890 0.492#> 3 601919.SH 中远海… 0.217 0.0009 0.267 1.23 1.40 1.03 0.476#> # ℹ 47 more rows#> # ℹ 5 more variables: `Correlationp-value` <dbl>, InformationRatio <dbl>,#> # `R-squared` <dbl>, TrackingError <dbl>, TreynorRatio <dbl>>> 4.3 数据分析

# 年化Alpha最大的股票tgt_stk <- SSE50_capm$stk_cd[[1]]# 计算动态相关系数tgt_cor <- SSE50_baseline %>% filter(stk_cd %in% tgt_stk) %>% as_tsibble(index = date) %>% # 有助于保证排序正确,且无重复样本 mutate( cor = slide2_dbl( Ra, Rb, ~ cor(.x, .y, use = "pairwise.complete.obs"), .before = 40, .complete = TRUE ) )tgt_cor %>% tail()#> # A tsibble: 6 x 6 [1D]#> # Groups: stk_cd, stk_nm [1]#> stk_cd stk_nm date Ra Rb cor#> <chr> <chr> <date> <dbl> <dbl> <dbl>#> 1 600905.SH 三峡能源 2023-06-21 0 -0.0145 0.416#> 2 600905.SH 三峡能源 2023-06-26 0.00758 -0.0129 0.356#> 3 600905.SH 三峡能源 2023-06-27 0 0.00650 0.358#> # ℹ 3 more rows>> 4.3 数据分析

# 用 dygraphs 包作交互图(其它如 plotly、echarts4r, highcharter、rbokeh 等)library(dygraphs)tgt_cor %>% as_tibble() %>% # 转成tibble timetk::tk_xts(select = cor, date_var = date) %>% # 转成dygraphs支持更好的xts dygraph(main = glue::glue("Correlation between {tgt_stk} and Baseline")) %>% dyAxis("y", label = "Correlation") %>% dyRangeSelector(height = 25)课后作业

1. 根据本讲课程讲义的打印稿,在 📑 qmd中键入并完成代码的运行

2. 浏览(阅读)tidyquant 包配套的七份 📰 vignettes

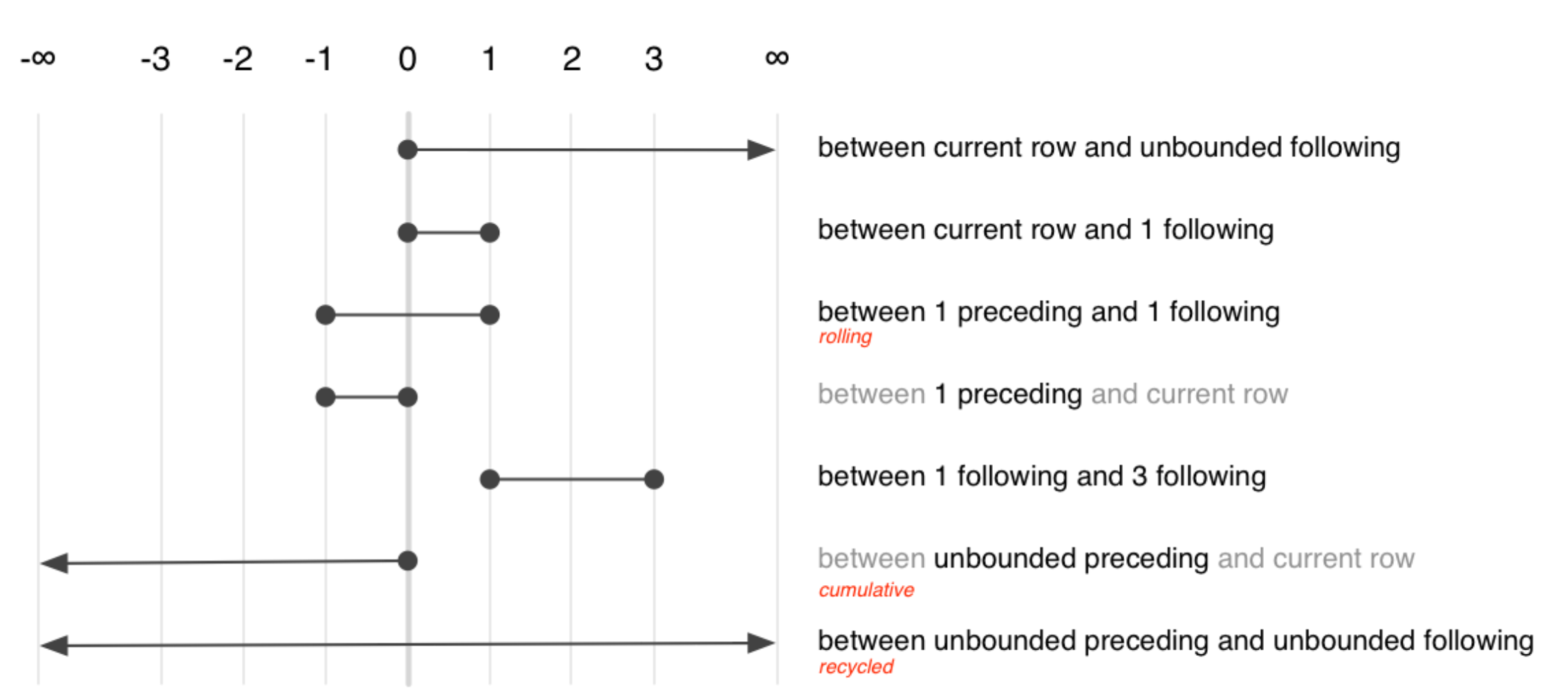

3. 进一步了解 📦 {{slider 包}},想想如何通过参数设定实现以下的移动窗口

# 模拟数据set.seed(123456)tbl <- tibble( Count = runif(16, min=100, max=320) %>% as.integer(), Year = 1997:2012)# slidingtbl %>% mutate(mean = slide_dbl(<...>))# tilingtbl %>% mutate(mean = slide_dbl(<...>))# stretchingtbl %>% ...